> Well, PI seems to be looking at how much physical memory there is, so even if a bunch is already allocated, it seems like ImageIntegration is not going to take into account how much virtual memory is already used. That's why I asked. But if there's no disk usage at all, then I guess it can't be the problem. At any rate, it should not thrash, since the JS engine is never going to try to access anything while ImageIntegration is running, but I figured there might be some paging penalty along the way.

Hi Rob,

Umm, sorry... From what I have observed myself, PI does look at and take into account how much virtual memory is already in use.

Because I still run PI on a rather slow processor today (i7-2600, 4x2 logical processors), I am very interested in how it uses system resources. I keep xosview (yes, I run PI on Linux) showing CPU, memory, disk I/O, swap, paging activity, interrupts and IRQs while I run several image processing steps on a modest number of image files (e.g. only 40 to 50), each of them around 500MB in size.

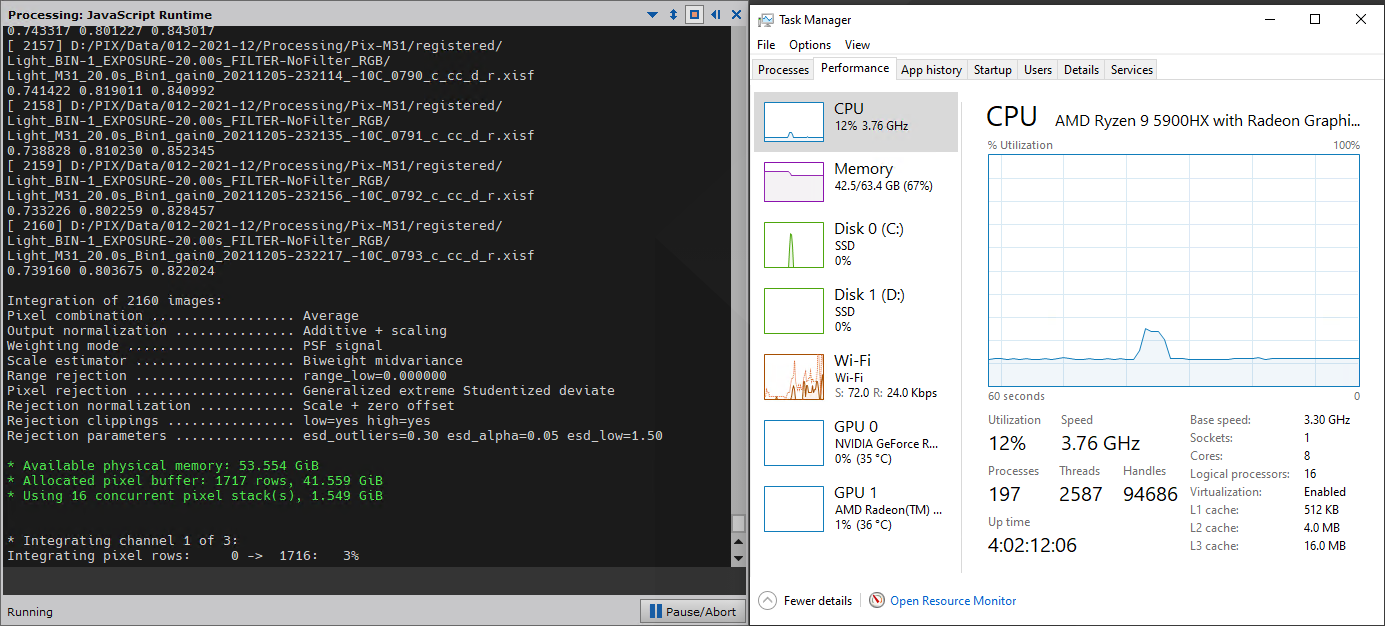

When running PI on a rather fast Ryzen 4700U with only 16GB of RAM, I often got a message about memory constraints, and PI then reduced the number of processing threads. These messages show up only once, however, and scroll away quickly (that's why I set the maximum number of console lines to 16000, to get a clue about the cause of problems ;-) ).

However, I never saw memory-constraint messages on the i7 with 32GB of RAM and 8 logical processors. So 4GB per logical processor seems to be a fair ratio; it is met by the Ryzen 9 shown above, but not by the Ryzen 9 3950X with 32 logical processors and "only" 64GB (2GB per logical processor) that gkunz showed initially.

Planning on a 16-core (and thus ideally 32 logical processors) CPU for my next number cruncher, I would go for at least 128GB ;-)
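The sizing rule above is just RAM divided by logical processors. Here is a quick sketch of that arithmetic, using the figures from this thread. Note that the 4GB-per-logical-processor target is only my empirical rule of thumb, not a formula PixInsight itself uses, and the Ryzen 4700U's 8 logical processors (8 cores, no SMT) come from its spec sheet rather than from the posts above.

```python
# Empirical rule of thumb from this thread (an assumption, NOT a PixInsight
# formula): about 4 GB of RAM per logical processor seems to avoid the
# memory-constraint messages that make PI reduce its processing threads.
GB_PER_LOGICAL_TARGET = 4.0

# (system, logical processors, installed RAM in GB)
systems = [
    ("i7-2600", 8, 32),
    ("Ryzen 4700U", 8, 16),        # 8 logicals taken from the CPU spec sheet
    ("Ryzen 9 3950X", 32, 64),
    ("planned 16-core", 32, 128),
]


def gb_per_logical(logicals: int, ram_gb: float) -> float:
    """RAM available per logical processor, in GB."""
    return ram_gb / logicals


def ram_for_target(logicals: int, target: float = GB_PER_LOGICAL_TARGET) -> float:
    """RAM (GB) needed to reach the per-logical-processor target."""
    return logicals * target


for name, logicals, ram in systems:
    ratio = gb_per_logical(logicals, ram)
    verdict = "ok" if ratio >= GB_PER_LOGICAL_TARGET else "below target"
    print(f"{name}: {ratio:.1f} GB per logical ({verdict}), "
          f"target {ram_for_target(logicals):.0f} GB")
```

By this rule, the 4700U and the 3950X both sit at 2GB per logical processor, which matches where the memory-constraint messages showed up, while 32 logical processors would call for 128GB.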

But enough stories for now...

What I have missed in the discussion so far: did you ever inspect the console log for messages that indicate, or give a clue to, why the CPU usage drops?

Kind regards

Martin