StarAlignment: New Pixel Interpolation Algorithms

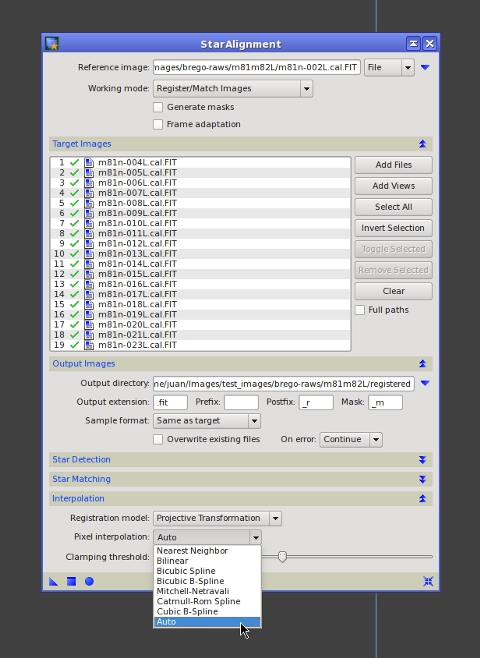

The StarAlignment tool released with PixInsight 1.6.1 provides the full set of pixel interpolation algorithms available on the PixInsight/PCL platform, as shown in the accompanying screenshot.

Automatic

This is the default interpolation mode. In this mode, StarAlignment selects one of the following interpolation algorithms according to the scaling factor of the geometric registration transformation:

- Cubic B-spline filter interpolation when the scaling factor is below approximately 0.25.

- Mitchell-Netravali filter interpolation for scaling factors between approximately 0.25 and 0.6.

- Bicubic spline interpolation in all other cases: from moderate size reductions to no rescaling or upscaling.
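The selection rule above can be sketched as a small function. This is only an illustration: `select_interpolation` is a hypothetical helper, not part of the PixInsight/PCL API, and it treats the approximate thresholds 0.25 and 0.6 as exact cut-offs, which is an assumption.

```python
def select_interpolation(scale: float) -> str:
    """Return the name of the interpolation chosen for a given scaling factor.

    Hypothetical helper: the 0.25 and 0.6 limits are quoted as approximate
    in the text, so treating them as exact boundaries is an assumption.
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    if scale < 0.25:
        return "cubic B-spline filter"      # strong size reduction
    if scale < 0.6:
        return "Mitchell-Netravali filter"  # intermediate size reduction
    return "bicubic spline"                 # mild reduction, 1:1, or upscaling


for s in (0.1, 0.4, 1.0, 2.0):
    print(s, "->", select_interpolation(s))
```

How the boundary values themselves are handled (strict or inclusive comparison) is not stated in the text, so the comparisons above are a free choice.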

Bicubic spline

This is, in general, the most accurate pixel interpolation algorithm available for image registration on the PixInsight/PCL platform. In most cases it yields the best results in terms of preserving original image features and subpixel registration accuracy. When this interpolation is selected (either explicitly or automatically), a linear clamping mechanism prevents oscillations of the cubic interpolation polynomials in the presence of jump discontinuities. Linear clamping is controlled with the linear clamping threshold parameter.
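To make the idea of linear clamping concrete, here is a minimal one-dimensional sketch. It is not the PCL implementation: it uses a Catmull-Rom cubic as a stand-in for the bicubic spline, and the clamping rule (fall back to linear interpolation when the cubic overshoots the two central samples by more than `threshold` times the spread of the four samples) is an assumption chosen for illustration. The function names and the default threshold value are hypothetical.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Cubic interpolation between p1 and p2 at fractional position t in [0, 1]."""
    return 0.5 * (
        2.0 * p1
        + (-p0 + p2) * t
        + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t ** 2
        + (-p0 + 3.0 * p1 - 3.0 * p2 + p3) * t ** 3
    )


def interpolate_clamped(p0, p1, p2, p3, t, threshold=0.3):
    """Cubic interpolation with an assumed linear-clamping rule.

    If the cubic value leaves the range [min(p1, p2), max(p1, p2)] by more
    than threshold * (spread of the four samples), return the linear
    interpolation between p1 and p2 instead.
    """
    cubic = catmull_rom(p0, p1, p2, p3, t)
    linear = p1 + (p2 - p1) * t
    lo, hi = min(p1, p2), max(p1, p2)
    overshoot = max(lo - cubic, cubic - hi, 0.0)
    spread = max(p0, p1, p2, p3) - min(p0, p1, p2, p3)
    return linear if overshoot > threshold * spread else cubic


# A jump discontinuity next to the interpolated interval makes the cubic
# undershoot; a small threshold replaces it with the linear value.
print(interpolate_clamped(0.0, 0.0, 0.0, 1.0, 0.5, threshold=0.05))
print(interpolate_clamped(0.0, 0.0, 0.0, 1.0, 0.5, threshold=0.3))
```

In this sketch a lower threshold clamps more aggressively, while a higher one lets more of the cubic response through.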

Bilinear interpolation

This interpolation can be useful for registering low-SNR linear images, in the rare cases where bicubic spline interpolation generates excessively strong oscillations between noisy pixels that the linear clamping feature cannot completely avoid.
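The reason bilinear interpolation cannot oscillate is visible in a short sketch: the result is a weighted average of the four surrounding pixels with non-negative weights, so it always stays within their range. The `bilinear` function below is a hypothetical illustration operating on a list of rows, not PixInsight code, and it clamps coordinates at the image border as a simplifying assumption.

```python
import math


def bilinear(img, x, y):
    """Bilinear interpolation of img (list of rows) at real coordinates (x, y)."""
    h, w = len(img), len(img[0])
    x0 = min(max(math.floor(x), 0), w - 1)
    y0 = min(max(math.floor(y), 0), h - 1)
    x1 = min(x0 + 1, w - 1)  # clamp at the right border
    y1 = min(y0 + 1, h - 1)  # clamp at the bottom border
    fx = min(max(x - x0, 0.0), 1.0)
    fy = min(max(y - y0, 0.0), 1.0)
    top = img[y0][x0] * (1.0 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1.0 - fx) + img[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy


img = [[0.0, 1.0],
       [2.0, 3.0]]
print(bilinear(img, 0.5, 0.5))  # average of the four pixels
```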

Cubic filter interpolations

These include the Mitchell-Netravali, Catmull-Rom spline, and cubic B-spline filters. They provide the higher smoothness and subsampling accuracy that can be necessary when the registration transformation involves relatively strong size reductions.
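The three filters named above are commonly described as members of one two-parameter family of piecewise cubic kernels, with parameters usually written B and C. The sketch below evaluates that family; the function and constant names are hypothetical, and the parameter values are the conventional ones for each filter, which may differ from what PixInsight uses internally.

```python
def bc_kernel(x, b, c):
    """Piecewise cubic filter kernel of the (B, C) family, with support [-2, 2]."""
    x = abs(x)
    if x < 1.0:
        return ((12 - 9 * b - 6 * c) * x ** 3
                + (-18 + 12 * b + 6 * c) * x ** 2
                + (6 - 2 * b)) / 6.0
    if x < 2.0:
        return ((-b - 6 * c) * x ** 3
                + (6 * b + 30 * c) * x ** 2
                + (-12 * b - 48 * c) * x
                + (8 * b + 24 * c)) / 6.0
    return 0.0


# Conventional (B, C) values; assumed here, not taken from the PixInsight sources.
MITCHELL_NETRAVALI = (1.0 / 3.0, 1.0 / 3.0)
CATMULL_ROM = (0.0, 0.5)
CUBIC_B_SPLINE = (1.0, 0.0)

for name, (b, c) in [("Mitchell-Netravali", MITCHELL_NETRAVALI),
                     ("Catmull-Rom", CATMULL_ROM),
                     ("cubic B-spline", CUBIC_B_SPLINE)]:
    print(name, "center weight:", bc_kernel(0.0, b, c))
```

A center weight below 1 means the filter does not reproduce the original samples exactly and instead smooths them, which is consistent with the smoothness mentioned above for strong size reductions. The Catmull-Rom kernel has a center weight of 1 and is therefore interpolating.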

Nearest neighbor

This is the simplest possible pixel interpolation method. It always produces the worst results, especially in terms of registration accuracy and of the discontinuities caused by its simplistic interpolation scheme. However, in the absence of scaling, nearest neighbor preserves the original noise distribution in the registered images, a property that can be useful in some image analysis applications. Nearest neighbor is not recommended for production work, mainly because it does not provide subpixel registration accuracy.
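Both properties mentioned above, the unchanged pixel values and the lack of subpixel accuracy, show up in a minimal sketch. `nearest_neighbor` is a hypothetical helper working on a list of rows; rounding half up and clamping at the borders are assumptions made for this illustration.

```python
import math


def nearest_neighbor(img, x, y):
    """Return the pixel of img (list of rows) closest to real coordinates (x, y)."""
    h, w = len(img), len(img[0])
    xi = min(max(math.floor(x + 0.5), 0), w - 1)  # round, then clamp to the image
    yi = min(max(math.floor(y + 0.5), 0), h - 1)
    return img[yi][xi]


row = [[10, 20, 30, 40]]

# A 0.4 pixel shift is lost entirely: every sample rounds back to its own pixel.
shift_04 = [nearest_neighbor(row, x + 0.4, 0) for x in range(4)]
# A 0.6 pixel shift becomes a full one-pixel shift (last sample clamped).
shift_06 = [nearest_neighbor(row, x + 0.6, 0) for x in range(4)]

print(shift_04)
print(shift_06)
```

Every output value is copied from an input pixel, so no new values are created and the noise statistics are untouched, but shifts are effectively quantized to whole pixels.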

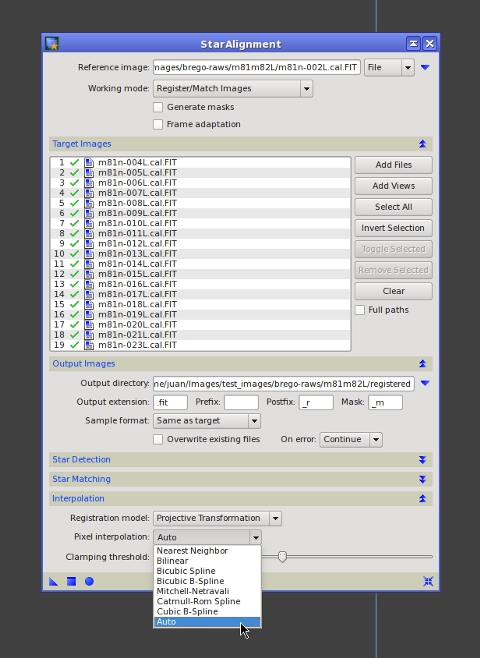
