NOTE: This thread is obsolete. For up-to-date information on the new linear fit clipping pixel rejection algorithm, please go to this thread.

Sorry for the inconvenience.

Hi there,

The new version of the ImageIntegration tool that comes with PixInsight 1.6.1 implements a new pixel rejection algorithm: RANSAC linear fitting. This is the first of a series of improvements in this essential tool, which will be introduced during the 1.6.x cycle.

The random sample consensus (RANSAC) algorithm is already being used in PixInsight with great success. The StarAlignment tool implements a sophisticated RANSAC procedure as the final stage of its star matching engine. The main advantage of RANSAC is its robustness. In the new rejection method implemented in ImageIntegration, RANSAC is used to fit the best possible straight line (in the twofold sense of minimizing average deviation and maximizing inliers) to the set of pixel values of each pixel stack. At the same time, all pixels that don't agree with the fitted line (to within user-defined tolerances) are considered outliers and are excluded from the final integrated image.

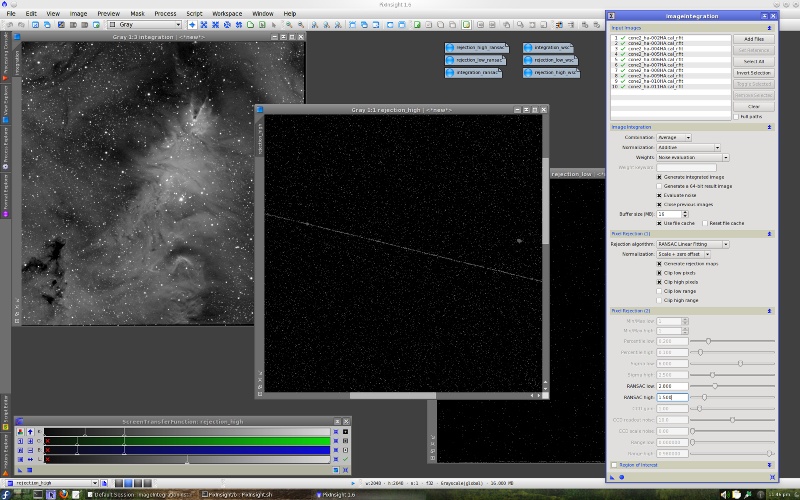

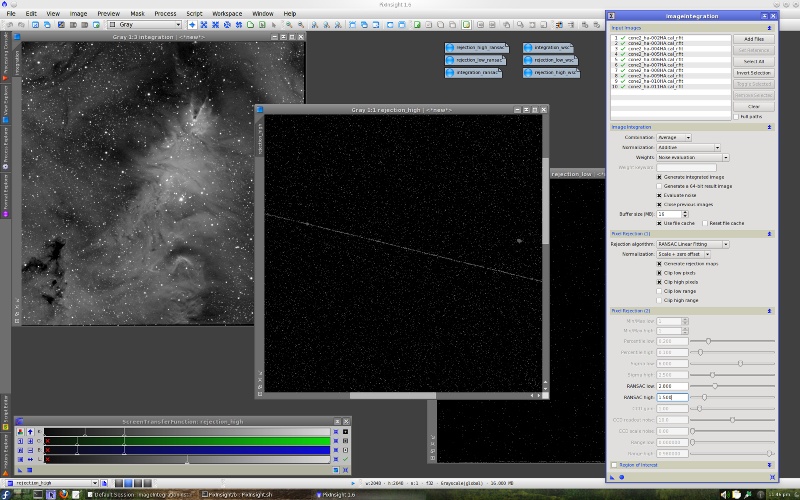
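To make the idea more concrete, here is a minimal Python sketch of RANSAC line fitting applied to a single pixel stack. It is not the ImageIntegration code, just an illustration under several assumptions of mine: the line is fitted to the sorted pixel values against their rank, sigma is taken as the normalized median absolute deviation of the stack, and the names `ransac_line_rejection`, `sigma_low`, `sigma_high`, `trials` and `seed` are hypothetical.

```python
import random
import statistics


def ransac_line_rejection(stack, sigma_low=3.0, sigma_high=3.0, trials=200, seed=0):
    """Return (kept, rejected) frame indices for one pixel stack."""
    n = len(stack)
    if n < 5:
        raise ValueError("linear fit rejection needs at least five images")

    # Assumption: the line is fitted to the sorted values against their rank.
    order = sorted(range(n), key=lambda i: stack[i])
    values = [stack[i] for i in order]

    # Assumption: sigma is a robust scale estimate (normalized MAD) of the stack.
    median = statistics.median(values)
    sigma = 1.4826 * statistics.median([abs(v - median) for v in values])

    # Small slack so the two sampled points always count as inliers
    # despite floating point rounding.
    eps = 1e-9 * max(1.0, max(abs(v) for v in values))
    tol_low = sigma_low * sigma + eps
    tol_high = sigma_high * sigma + eps

    rng = random.Random(seed)
    best_score, best_inliers = None, None
    for _ in range(trials):
        # Candidate line through two randomly chosen points of the stack.
        r1, r2 = rng.sample(range(n), 2)
        slope = (values[r2] - values[r1]) / (r2 - r1)
        intercept = values[r1] - slope * r1
        residuals = [values[r] - (slope * r + intercept) for r in range(n)]

        # Pixels within the low/high tolerances agree with this line.
        inliers = [r for r in range(n) if -tol_low <= residuals[r] <= tol_high]
        mean_dev = sum(abs(residuals[r]) for r in inliers) / len(inliers)

        # Prefer more inliers first, then a smaller average deviation.
        score = (len(inliers), -mean_dev)
        if best_score is None or score > best_score:
            best_score, best_inliers = score, inliers

    inlier_ranks = set(best_inliers)
    kept = sorted(order[r] for r in inlier_ranks)
    rejected = sorted(order[r] for r in range(n) if r not in inlier_ranks)
    return kept, rejected


# Ten frames, with a bright outlier (say, a cosmic ray hit) in frame 4.
stack = [0.101, 0.099, 0.100, 0.102, 0.950, 0.098, 0.103, 0.100, 0.097, 0.101]
kept, rejected = ransac_line_rejection(stack)
print("rejected frames:", rejected)
```

The score tuple is one simple way to express the twofold criterion: the inlier count is compared first, and the average deviation only breaks ties between lines with the same number of inliers.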

The new pixel rejection algorithm is controlled, as usual, with two dedicated threshold parameters in sigma units: RANSAC low and RANSAC high, respectively for low and high pixels. The screenshot below shows the new version of ImageIntegration and some results of the new rejection method.

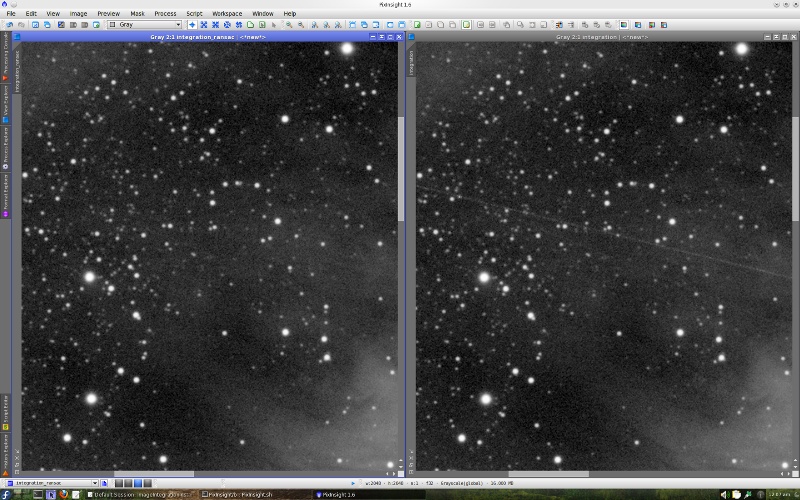

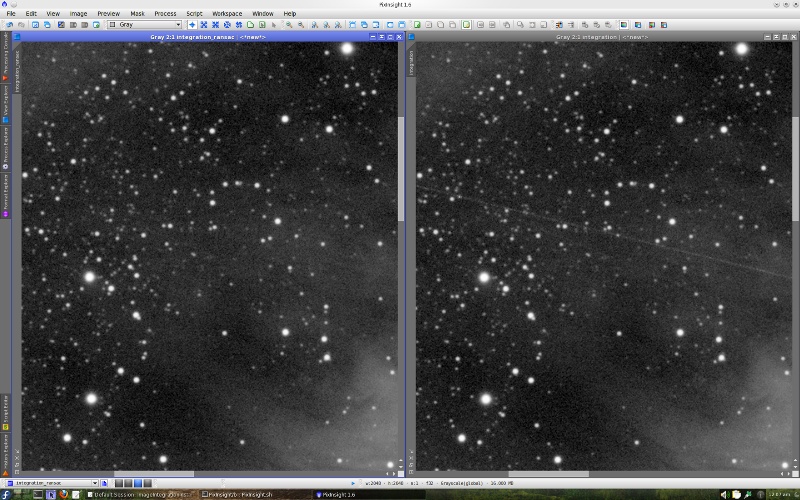

The next screenshot is a comparison between an integrated image (raw data courtesy of Oriol Lehmkuhl and Ivette Rodríguez) with the new RANSAC fitting rejection (left) and without rejection (right). Both images integrate 10 frames with signal-to-noise improvements of 2.86 and 2.92, respectively. SNR degradation is very low with excellent outlier rejection properties.
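To put those two figures in perspective, the short calculation below compares them with each other and with the ideal case. It assumes the improvements are measured relative to a single frame, and that averaging N frames with uncorrelated noise gives at best a sqrt(N) gain; the variable names are just for illustration.

```python
import math

frames = 10
snr_with_rejection = 2.86      # figure quoted above, RANSAC fitting rejection
snr_without_rejection = 2.92   # figure quoted above, no rejection

# Ideal gain when averaging N frames with uncorrelated noise.
ideal_gain = math.sqrt(frames)

# Fraction of the SNR improvement lost by enabling rejection.
relative_loss = 1 - snr_with_rejection / snr_without_rejection

print(f"ideal sqrt(N) gain: {ideal_gain:.2f}")          # 3.16
print(f"loss due to rejection: {relative_loss:.1%}")    # 2.1%
```

So in this example, rejection costs roughly 2% of the SNR improvement obtained without rejection.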

The new RANSAC linear fitting rejection requires at least five images. It is best suited for large sets of images (>= 8).
