I've been accumulating data, and after each night I run a draft integration with WBPP. I've noticed the result still has hints of airplane trails in it, despite an ever-growing number of subs.

I just did a test and integrated by hand, using LN first (and got the same result as WBPP), then turning off local normalization in favor of additive with scaling and scale + zero offset, with everything else the same. The trail disappeared in my sample (if you really squint you may see some vague hint of it, but it's largely gone). I was using ESD for rejection with defaults, and again, nothing changed other than LN being turned off. There are 199 subs, so it's not a small sample, and I blinked carefully to ensure there was only one sub with a trail in this location. This isn't the only data set showing the problem (if I recall, one of the others has 289 subs).

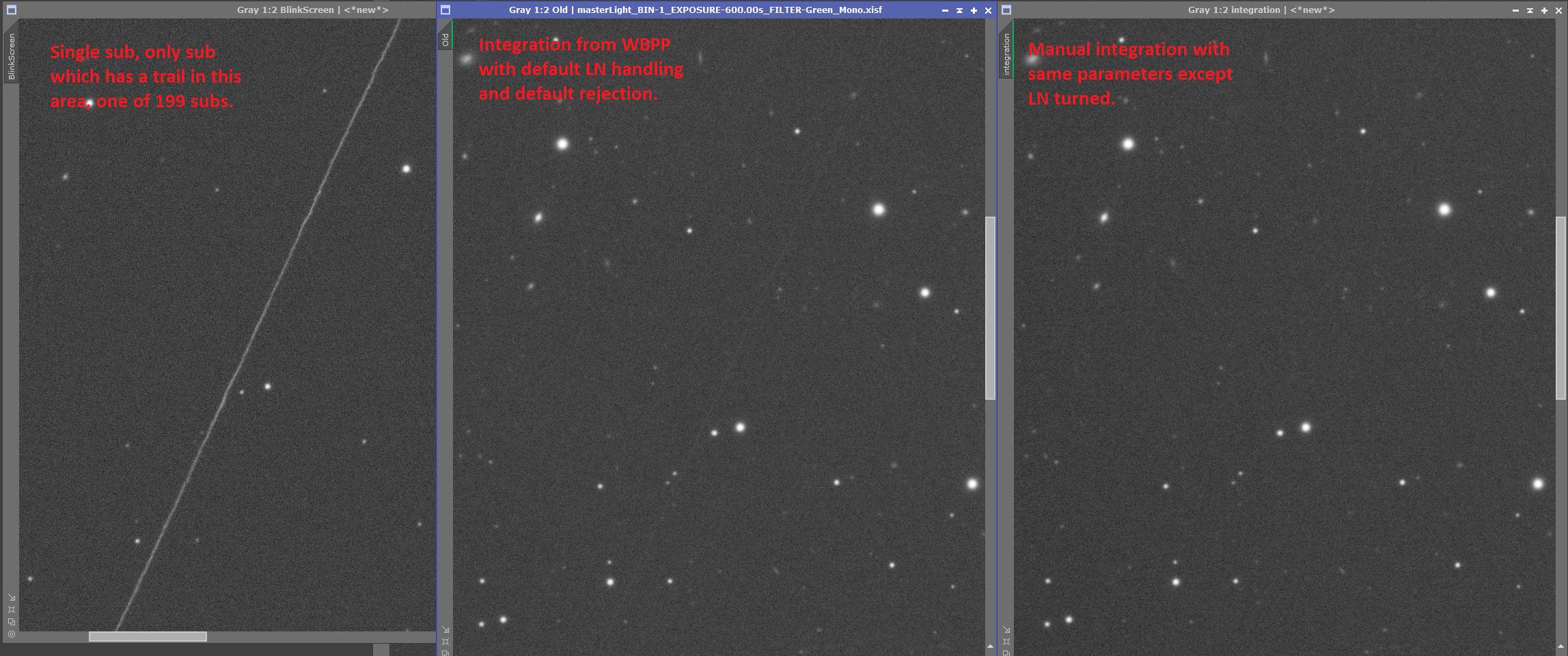

Here is an example at 1:2; hopefully the forum will preserve the size of the attachment so you can see it.

Should LN cause rejection to work more poorly? It's a good-sized, heavy trail, but not what I would expect to be a problem, especially with only 1 of 199 subs showing it.

Linwood

PS. These were PI LN files, nothing to do with NSG-generated ones.
