Hi All,

Per Adam's suggestion, I did a comparison between the Standard WBPP Integration process and the FastIntegration process to compare how long each takes to complete and the resulting image quality.

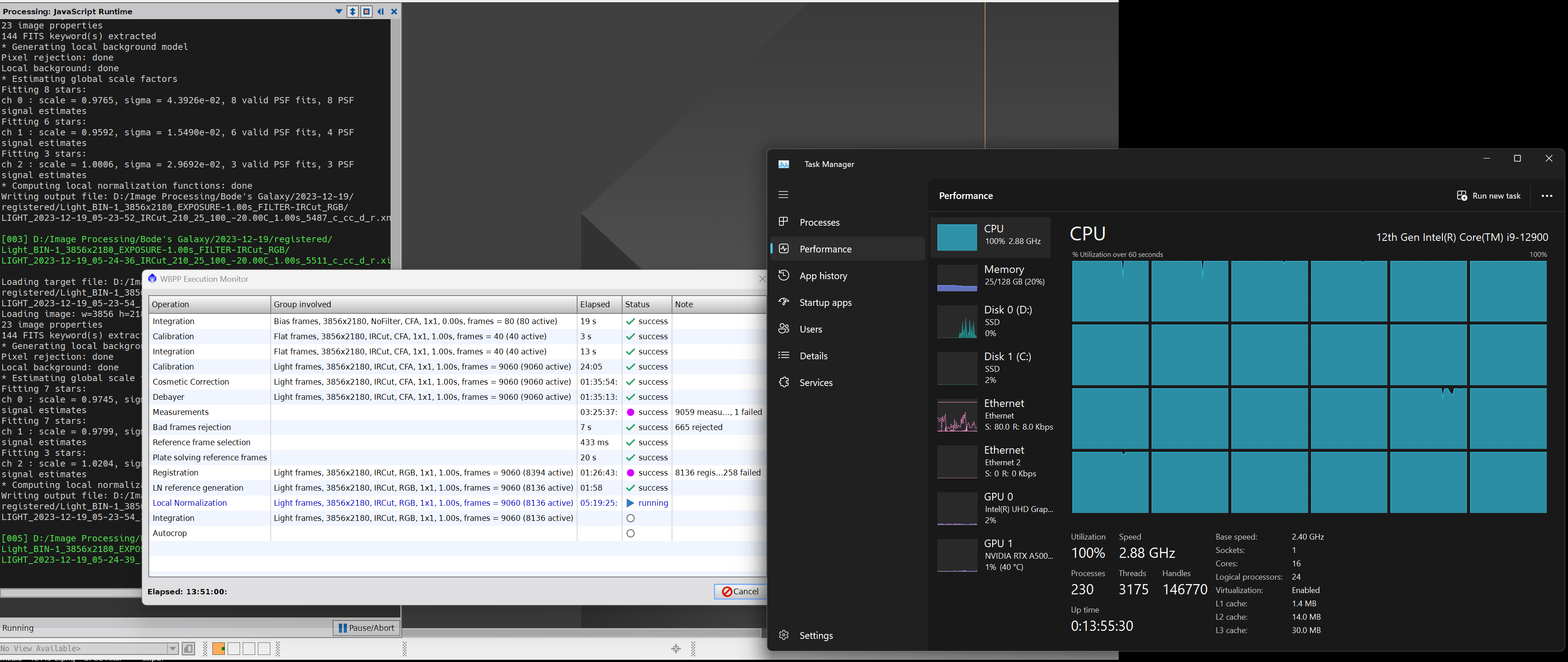

First of all, for reference, here are the specs of my dedicated PI system:

Lenovo P360 running Windows 11 Pro

Intel Core i9 12900 with 16 cores (24 threads)

128GB of DDR5 RAM (Non-ECC)

OS Drive: 1TB NVMe SSD

Image Processing Drive: 2x 4TB WD Black SN850X NVMe PCI-E Gen4 running as an 8TB RAID 0 array (Transfers up to 16GB/s!)

All raw and resultant data is saved on my NAS, as you can't trust a RAID 0 array, but I love its speed!

Oh, and an Nvidia RTX A5000 with 16GB of RAM, but that's irrelevant here

The Standard Integration

The Standard Integration using WBPP took well over 30 hours! WBPP actually kept crashing on the ImageIntegration step because it was trying to load way too many rows of the 7738 images that remained after the rest had been rejected by the other processes. So it was actually 17 hours of WBPP plus another 13 hours of laboriously integrating 1000 images at a time in 8 groups, then integrating those into the final result. This is the RAW stretched image that was produced below. Keep in mind this was with local normalization and weighting enabled:

The FastIntegration process with the 9000 frames took about 1 hour and 30 minutes, after the initial 3 hours to calibrate and demosaic the frames, for a total of about 4 hours and 30 minutes! Yes, it skips the local normalization, but with so many subs it eventually averages out pretty well. But what about the results? This is the RAW stretched image that was produced below:

What a single sub looks like!

A few things to unpack here... First of all, the images look similar, but the FastIntegration version actually looks a little better. Yes, I need more data, so I collected another 8000 subs and will hopefully get more. Then I will make a post on how I use FastIntegration to process my many thousands of images to mitigate seeing and light pollution, and show my final image of M81. Still, the FI image is pretty good for being captured almost at the diffraction limit of the scope! I have since refined my calibration to remove more non-photon noise, so the next image will look fantastic! FastIntegration will save me money in the long run through a lower electric bill, and time too, as I saved more than 25 hours! The image below is why this PC is dedicated to PI. This is not a bad thing, this is by design, to process as much and as quickly as possible! It also makes for a good space heater in the winter
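For anyone who wants the time comparison spelled out, here is a quick back-of-the-envelope sketch in Python using only the durations quoted in this post. The variable names are made up for illustration, and the standard run is taken as exactly 17 + 13 hours even though it was "well over" 30, so the savings are a lower bound.

```python
# Durations quoted in the post, in hours (variable names are illustrative).
standard_wbpp_hours = 17.0      # WBPP run up to the ImageIntegration crashes
standard_manual_hours = 13.0    # manual integration, 1000 frames at a time
fast_calibration_hours = 3.0    # calibrate and demosaic
fast_integration_hours = 1.5    # FastIntegration itself

standard_total = standard_wbpp_hours + standard_manual_hours
fast_total = fast_calibration_hours + fast_integration_hours

saved_hours = standard_total - fast_total
speedup = standard_total / fast_total

print(f"standard: {standard_total} h, fast: {fast_total} h")
print(f"saved: {saved_hours} h, about {speedup:.1f}x faster")
# prints:
# standard: 30.0 h, fast: 4.5 h
# saved: 25.5 h, about 6.7x faster
```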

Fast imaging is not for the faint of heart, as it can quickly fill up your hard drive, especially while processing, but so far the results have been pretty amazing, especially after deconvolution. I can't wait to show you guys the end result. Until then, happy fast imaging!
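As a side note on the batch workaround from the standard run (integrating 1000 images at a time, then integrating the group results), here is a minimal Python sketch of why that two-stage approach can work for a plain average. It is only an illustration under loud assumptions: it uses a simple mean of one synthetic pixel value per frame, not PixInsight's ImageIntegration with rejection, local normalization, or weighting, and the function names `split_into_groups` and `combine_group_means` are hypothetical. The point is that group means should be combined weighted by frame count, since the last group is smaller than the others.

```python
import math


def split_into_groups(n_frames, group_size):
    """Return the size of each batch when n_frames are split into groups."""
    full, rest = divmod(n_frames, group_size)
    return [group_size] * full + ([rest] if rest else [])


def combine_group_means(group_means, group_counts):
    """Combine per-group means into one mean, weighted by frame count."""
    total = sum(group_counts)
    return sum(m * c for m, c in zip(group_means, group_counts)) / total


# 7738 frames in batches of 1000, as in the post.
sizes = split_into_groups(7738, 1000)
print(len(sizes), sizes[-1])  # prints: 8 738

# Synthetic stand-in data: one pixel value per frame (assumption, not real subs).
pixel_values = [100.0 + (i % 7) for i in range(7738)]

# Stage 1: average each batch separately.
group_means = []
start = 0
for size in sizes:
    batch = pixel_values[start:start + size]
    group_means.append(sum(batch) / size)
    start += size

# Stage 2: combine the batch averages, weighted by how many frames each holds.
two_stage_mean = combine_group_means(group_means, sizes)
direct_mean = sum(pixel_values) / len(pixel_values)

print(math.isclose(two_stage_mean, direct_mean))  # prints: True
```

With rejection and normalization in play, the two-stage result is not guaranteed to match a single full integration exactly, so treat this as a sanity check of the averaging step only.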

A very sincere THANKS to the PixInsight team that made this possible!

Clear Skies!

Dave