Credibility can be diminished not only when someone cheats, but also when they don't provide enough proof (burden of proof).

And that is what Vicent did this time with his first post: just three JPEGs and "have a go at it"... That is the post that prompted me to warn him to be careful.

I disagree. I think Vicent provided a good amount of proof:

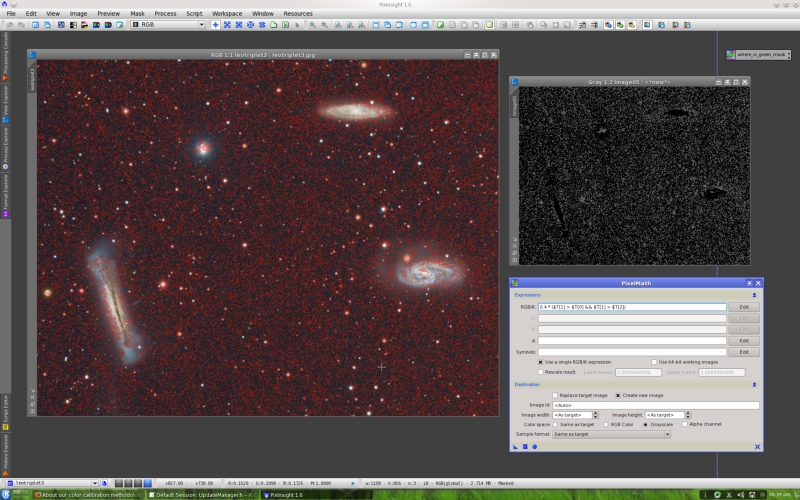

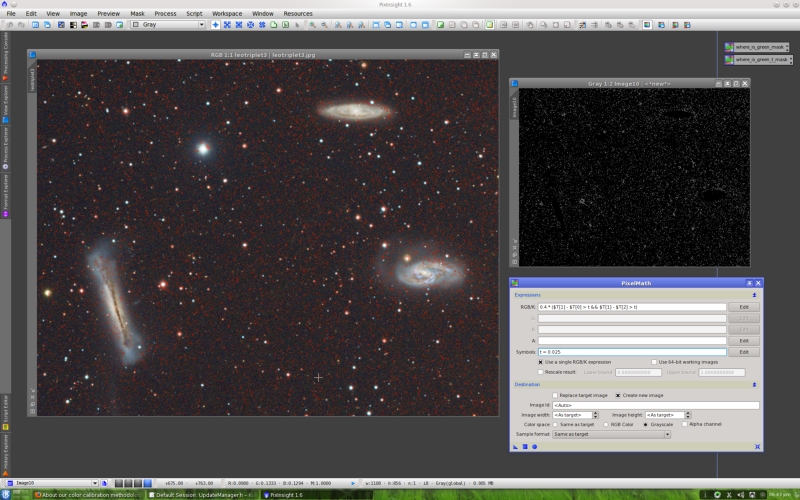

- He published the raw image, the image calibrated with eXcalibrator, and the image calibrated with PixInsight. These are not "just three jpegs"; they are stretched JPEG versions of the working images. Obviously, since the working images are linear (a color calibration procedure cannot be carried out on nonlinear images), the JPEG versions had to be stretched and slightly processed in order to evaluate the results; otherwise they would be almost black! We figured that both facts (the linearity of the data and the need to show stretched versions) were so obvious that it was unnecessary to comment on them. Of course, both resulting images received exactly the same treatment except for the color calibration step, which is the subject of the test; otherwise the test would be invalid. That is completely out of the question and, again, so obvious that no explanation seemed necessary.

- Detailed numeric data about the eXcalibrator calibration: the number of stars used (12), the survey (Sloan), the dispersion in the results (0.02 in G and 0.03 in B), and the resulting weights (R=1, G=0.746 and B=0.682).

- Detailed data about the color calibration performed with ColorCalibration in PixInsight: the galaxy used as white reference (M66) and the resulting weights (R=1.055, G=1.1 and B=1).
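To put the published numbers side by side, here is a small Python sketch. It normalizes both weight sets to G (eXcalibrator reports R=1, ColorCalibration reports B=1, so raw values are not directly comparable), and it illustrates why the calibration is done on linear data: after a nonlinear stretch, no single per-channel factor reproduces "scale, then stretch" at every brightness level. The stretch used here is the midtones transfer function formula as commonly documented for PixInsight; the helper names and the midtones balance `m = 0.05` are hypothetical choices for illustration only, not part of either tool.

```python
"""Compare the two published weight sets and illustrate why color
calibration has to be applied to linear data (illustrative sketch)."""

# Per-channel weights (R, G, B) as quoted in the thread.
EXCALIBRATOR = (1.0, 0.746, 0.682)
COLOR_CALIBRATION = (1.055, 1.1, 1.0)


def normalize_to_green(weights):
    """Express weights relative to G so both calibrations share a reference."""
    r, g, b = weights
    return (r / g, 1.0, b / g)


def mtf(m, x):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5 and 1 -> 1."""
    return (m - 1) * x / ((2 * m - 1) * x - m)


def stretched_equivalent_weight(weight, level, m):
    """Factor that, applied to already-stretched data at this linear level,
    would reproduce 'multiply the linear value by weight, then stretch'."""
    return mtf(m, weight * level) / mtf(m, level)


if __name__ == "__main__":
    for name, w in (("eXcalibrator", EXCALIBRATOR),
                    ("ColorCalibration", COLOR_CALIBRATION)):
        r, _, b = normalize_to_green(w)
        print(f"{name}: R/G = {r:.3f}, B/G = {b:.3f}")

    # Hypothetical midtones balance, chosen only to make the stretch strong.
    m = 0.05
    for level in (0.01, 0.2):
        eq = stretched_equivalent_weight(EXCALIBRATOR[1], level, m)
        print(f"linear level {level}: equivalent G factor after stretch = {eq:.3f}")
```

On linear data the G weight acts identically on faint and bright pixels. The "equivalent factor" printed for the stretched case comes out different at the two levels, so a calibration done after stretching could not be expressed as one set of weights.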

We are used to working in academic environments (especially Vicent), so we do know something about how to carry out objective tests and comparisons. Many examples and tests published in professional scientific papers provide less data than what I've described above.

Of course, one can ask for more explanations, detailed descriptions and more quantitative data (although in this case there really is no quantitative data beyond the numbers that have already been published). Those requests are reasonable; I know Vicent is working right now on a detailed, step-by-step explanatory example, including screenshots.

The quality and value of an objective test can be criticized or questioned on objective technical grounds. It can also be discussed on conceptual and philosophical grounds. However, what cannot happen (and should never happen) is that the author's credibility is diminished because the JPEG versions of the resulting images are not what somebody expected, or because they just "look weird". That is an unscientific attitude.

Unfortunately, the talking behind people's backs in this discipline (and so many others) is sometimes quite bad.

That's true, but I am not interested in that. So, case closed for me too.
