Hi everybody,

Recently we have read some interesting discussions about color calibration on deep sky images. In some of these discussions we have seen how the tools that we have implemented in PixInsight, particularly the BackgroundNeutralization and ColorCalibration tools and their underlying methods and algorithms, have been analyzed on the basis of quite inaccurate descriptions and evaluations.

As this has been a recurring topic for a long time, I have decided to write a sticky post to clarify a few important facts about our deep sky color calibration methods and, in a broader way, about our philosophy of color representation in deep sky astrophotography.

First, let's describe how our two main color calibration tools work. Fortunately, both tools are now covered by the new PixInsight Reference Documentation, so we can link to the corresponding documents instead of repeating long descriptions here:

Official documentation for the BackgroundNeutralization tool.

Official documentation for the ColorCalibration tool.

The documentation for ColorCalibration is still incomplete (it lacks some examples and figures), but it provides a sufficiently accurate description to understand how the tool actually works.

As you can read in the above document, ColorCalibration can basically work in two different modes: range selection and structure detection. In range selection mode, ColorCalibration allows you to select a whole nearby spiral galaxy as a white reference (not the nucleus of a galaxy, as has been said). This method was devised by Vicent Peris, who has implemented and applied it to process a number of images acquired with large professional telescopes. Here are some images to which this calibration method has been applied strictly:

We are working, time permitting, on a formalization of this color calibration method, and Vicent is also working on a survey of reference calibration galaxies covering the entire sky. However, as you know, both my workload and Vicent's professional workload are huge, so don't expect this to be published very soon.

What happens when there are no galaxies in the image? The NGC 6914 image linked above is a good example. In these cases we simply take the calibration factors computed for a reference galaxy acquired with the same instrument and apply them to the image in question, taking into account the difference in atmospheric extinction between the two images.
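To make the extinction step more concrete, here is a minimal sketch in Python. It is not the code of any PixInsight tool. It assumes the standard extinction model, in which the observed flux in a channel is the true flux multiplied by 10^(-0.4·k·X), where k is the extinction coefficient of that channel in magnitudes per airmass and X is the airmass of the observation. The function name and all the numbers below are hypothetical and serve only as an illustration.

```python
def adjust_for_extinction(ref_factors, k_mag_per_airmass, airmass_ref, airmass_target):
    """Transfer per-channel calibration factors from a reference image to a target.

    Observed flux is modeled as true_flux * 10**(-0.4 * k * airmass), so a
    target observed through more air than the reference needs larger factors,
    most of all in the channel with the largest extinction coefficient.
    """
    delta = airmass_target - airmass_ref
    adjusted = [
        f * 10 ** (0.4 * k * delta)
        for f, k in zip(ref_factors, k_mag_per_airmass)
    ]
    # Only the ratios between channels matter, so rescale the largest factor to 1.
    top = max(adjusted)
    return [a / top for a in adjusted]


# Hypothetical numbers, in R, G, B order.
ref_factors = [1.00, 0.80, 0.90]   # factors measured on the reference galaxy
k_rgb = [0.10, 0.15, 0.25]         # assumed extinction, magnitudes per airmass

same_sky = adjust_for_extinction(ref_factors, k_rgb, 1.2, 1.2)
lower_target = adjust_for_extinction(ref_factors, k_rgb, 1.0, 1.5)
```

With equal airmasses the factors come back unchanged. When the target is observed at a higher airmass than the reference, the blue factor grows relative to the others, because blue light is attenuated the most.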

The other working mode of ColorCalibration, structure detection, can be used to select a large number of stars to compute a white reference. This is a local calibration method (valid only for the image being calibrated) that has many potential problems. For example, one must be sure that a sufficiently complete set of spectral types is being sampled, or the calibration factors can be strongly biased. When this method is applied carefully, though, it can provide quite good results. Here is an example:

Before ColorCalibration (working in structure detection mode):

After ColorCalibration:

Now that we have seen how our tools actually work and how they can be used in practice, let's talk a little about the underlying color philosophy. Common wisdom on color calibration methods for deep sky images relies on considering a particular spectral type as the white reference. In particular, the G2V spectral type (the solar type) is normally used as the white reference. Our color calibration methods don't follow this idea.

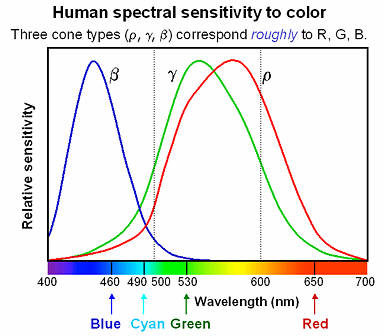

Why not rely on any particular spectral type for color calibration? Because we can't find any particularly good reason to use the apparent color of a star as a white reference for deep sky images. A G2V star is an ideal white reference for a daylight scene, if we want to take the human vision system as the underlying definition of "true color". For example, a G2V star is a plausible white reference for a planetary image. The reason is that all objects in the solar system are either reflecting or radiating the Sun's light. So if your image sensor has a linear response to incident light and you apply a linear transformation to the image such that a G2V star is rendered as pure white, then the planetary scene will be rendered in true daylight color, just as it would be seen directly by a human.
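The linear transformation mentioned above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the data are linear, the background has already been subtracted, and the transformation is a plain per-channel gain. The function names and flux values are hypothetical.

```python
def factors_for_white(reference_rgb):
    """Per-channel gains that map the reference color to equal R, G and B."""
    top = max(reference_rgb)
    return [top / c for c in reference_rgb]


def apply_factors(pixel_rgb, factors):
    """Linear per-channel scaling; valid only while the data are still linear."""
    return [p * f for p, f in zip(pixel_rgb, factors)]


# Hypothetical background-subtracted fluxes, in R, G, B order.
g2v_star = [0.50, 0.40, 0.25]
planet_pixel = [0.30, 0.20, 0.10]

gains = factors_for_white(g2v_star)
balanced_star = apply_factors(g2v_star, gains)
balanced_planet = apply_factors(planet_pixel, gains)
```

After scaling, the reference star has equal values in the three channels, and every other pixel in the image is multiplied by the same gains. Choosing a different white reference changes only the gains, not the procedure.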

In a deep sky image, however, no object is, in general, reflecting light from a G2V star. Deep sky images are definitely not daylight scenes, and most of the light that we capture and represent in them is far beyond the capabilities of the human vision system. We think that using a G2V star as a white reference for a deep sky image is too anthropocentric a view. We prefer to follow a completely different path, starting from the idea that no color can be taken as "real" in the deep sky, on a documentary basis. Instead of pursuing the illusion of real color, we try to apply a neutral criterion that pursues a very different goal: to represent a deep sky scene in an unbiased way regarding color, where no particular spectral type or color is favored over others. In our opinion, this is the best way to provide a plausible color calibration criterion for deep sky astrophotography, both conceptually and physically.

With this goal in mind, we try to design and implement what we call spectrum-agnostic or documentary calibration methods. These methods aim to maximize the information represented through color in an unbiased way. In Vicent's calibration method, we take the integrated light of a nearby spiral galaxy as the white reference. A nearby spiral galaxy with negligible redshift and good viewing conditions as seen from Earth is a plausible documentary white reference because it provides an excellent sample of all stellar populations and spectral types. Each pixel acquired from a galaxy is actually the result of the mixture of light from a large number of different deep sky objects.
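As a rough sketch of what taking the integrated light of a galaxy as the white reference can look like in practice, the Python fragment below averages the pixels of a reference region that fall inside a selected range, subtracts a background level and derives per-channel factors. It is a simplified illustration, not the actual ColorCalibration implementation. The function names, the rejection rule and the pixel values are assumptions made for this example.

```python
def channel_means_in_range(pixels, low, high):
    """Mean R, G, B over pixels whose channels all lie strictly between low and high."""
    kept = [p for p in pixels if all(low < c < high for c in p)]
    if not kept:
        raise ValueError("no pixels inside the selected range")
    return [sum(p[i] for p in kept) / len(kept) for i in range(3)]


def white_reference_factors(reference_pixels, background_rgb, low, high):
    """Gains that make the background-subtracted mean of the reference neutral."""
    means = channel_means_in_range(reference_pixels, low, high)
    signal = [m - b for m, b in zip(means, background_rgb)]
    if min(signal) <= 0:
        raise ValueError("reference is not brighter than the background")
    top = max(signal)
    return [top / s for s in signal]


# Hypothetical pixels cropped around a galaxy, in R, G, B order.
galaxy_pixels = [
    (0.30, 0.26, 0.20),
    (0.50, 0.42, 0.32),
    (0.40, 0.34, 0.26),
    (1.00, 1.00, 1.00),   # saturated star, rejected by the upper limit
    (0.00, 0.00, 0.00),   # dead pixel, rejected by the lower limit
]
background = (0.10, 0.10, 0.10)
factors = white_reference_factors(galaxy_pixels, background, low=0.0, high=0.95)
```

Because every accepted pixel of the galaxy contributes to the mean, the resulting factors reflect the mixture of all the stellar populations in the reference, not the color of any single object.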

In its structure detection mode, the ColorCalibration tool can be used to sample a large number of stars of varying spectral types. This can also be a good documentary white reference—if properly applied—because by averaging a sufficiently representative sample of stars we are not favoring any particular color.
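The importance of a representative sample can be shown with a toy example in Python. The star fluxes below are made-up numbers chosen only to illustrate the effect; they are not measurements of real spectral types, and the averaging shown here is a simplification, not the algorithm used by ColorCalibration.

```python
def mean_color(stars):
    """Average R, G, B flux over a list of background-subtracted star samples."""
    n = len(stars)
    return [sum(s[i] for s in stars) / n for i in range(3)]


def neutral_gains(stars):
    """Per-channel gains that render the average star color as neutral."""
    mean = mean_color(stars)
    top = max(mean)
    return [top / m for m in mean]


# Made-up fluxes in R, G, B order.
bluish = (0.20, 0.30, 0.50)
neutral = (0.40, 0.40, 0.40)
reddish = (0.50, 0.30, 0.20)
very_red = (0.60, 0.30, 0.15)

balanced_sample = [bluish, neutral, reddish]
red_only_sample = [reddish, very_red]

balanced_gains = neutral_gains(balanced_sample)
biased_gains = neutral_gains(red_only_sample)
```

With the balanced sample the gains stay close to one in all channels. With the sample of red stars only, the blue gain becomes more than three times larger, so the whole image would be pushed strongly toward blue.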

In some cases we have also implemented special variations of the galaxy calibration method. Vicent Peris processed one of the first deep fields pertaining to the ALHAMBRA survey, acquired with the 3.5 m telescope of Calar Alto Observatory:

This is the high-resolution image combining 14 of the 23 filters of ALHAMBRA, from 396 nm to 799 nm:

This image shows an extremely deep field. In fact, almost all of the objects that you can see in this image are galaxies, even though they may look like stars. This image poses a big problem in terms of color rendition due to two unique conditions: (1) it integrates light from 14 different filters and (2) the main goal of this image is to maximize the representation of distances. Basically, it is very important to clearly differentiate between the most distant galaxies, represented as extremely red objects, and the relatively close ones, represented in almost what we would recognize as "true color" from most "normal" galaxy images.

So instead of using a nearby spiral galaxy and applying the resulting calibration factors (which, in any case, is impossible here because no such image acquired with the same instrumentation exists), the white reference was taken as the average of some of the largest (and hence closest) galaxies in the image. Even if these galaxies may have some significant redshift, the resulting white reference leads to a local calibration procedure that maximizes the representation of object distances through color, which is the main documentary goal of this image.
