i'm wondering if anyone could shed some light on what i'm seeing here.

i've got 280 subframes, each a 10-second exposure, and i'm getting a strange result when i try to stack them.

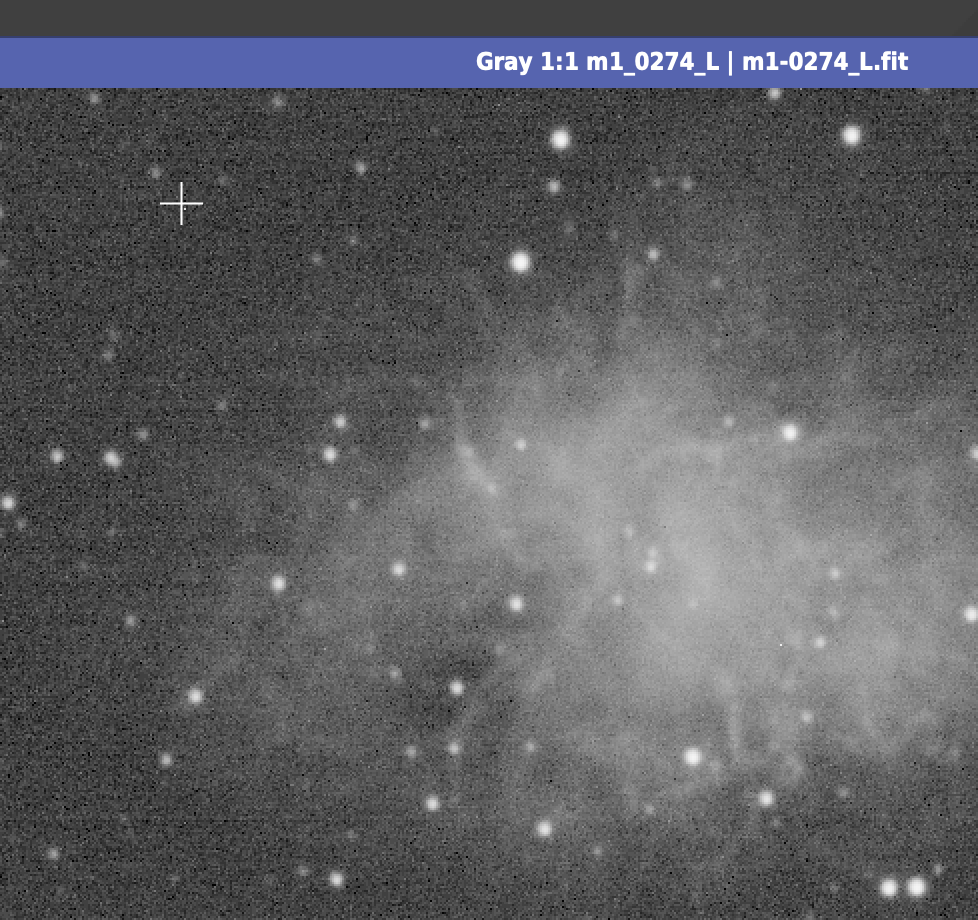

first, this is what a single frame looks like using STF:

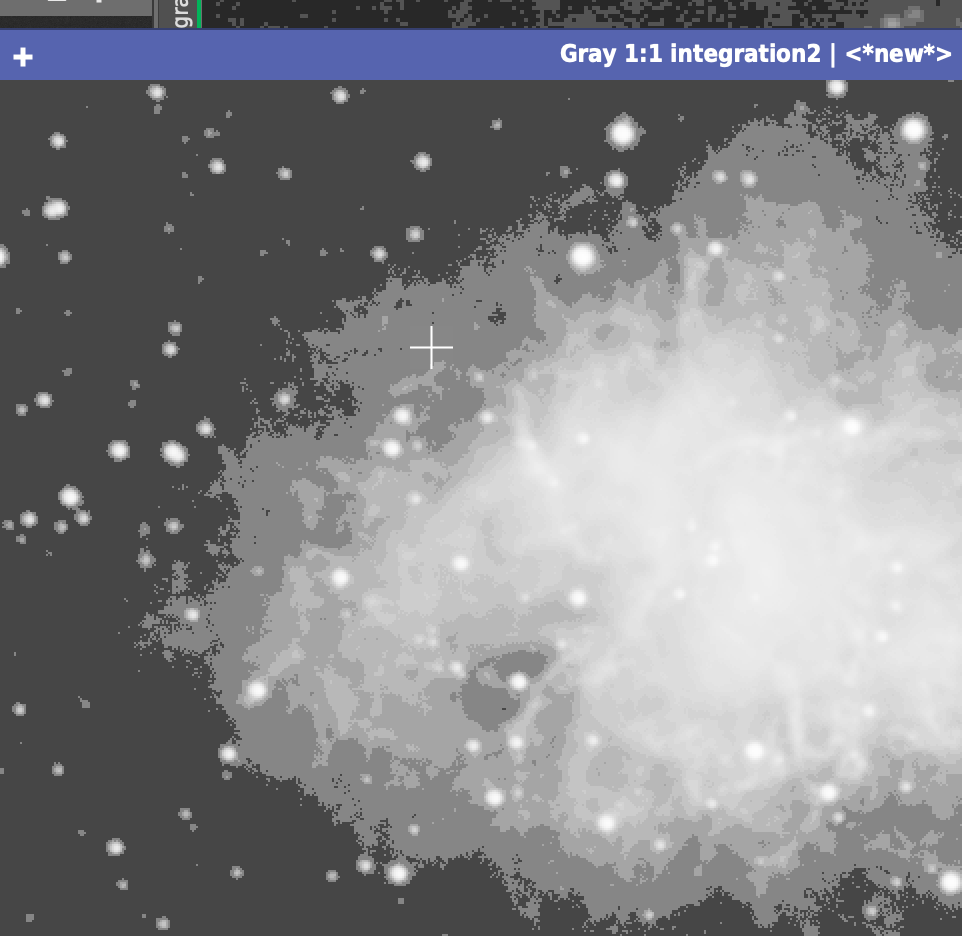

and stacking with all the default values (except linear fit clipping) ends up looking like this under STF:

i have another set of 280 subframes on a different target that yield similar results.

these subframes have only been registered - i wanted to keep things simple in trying to identify the problem, so i didn't calibrate them (but when i do calibrate them, similar bad results occur.)

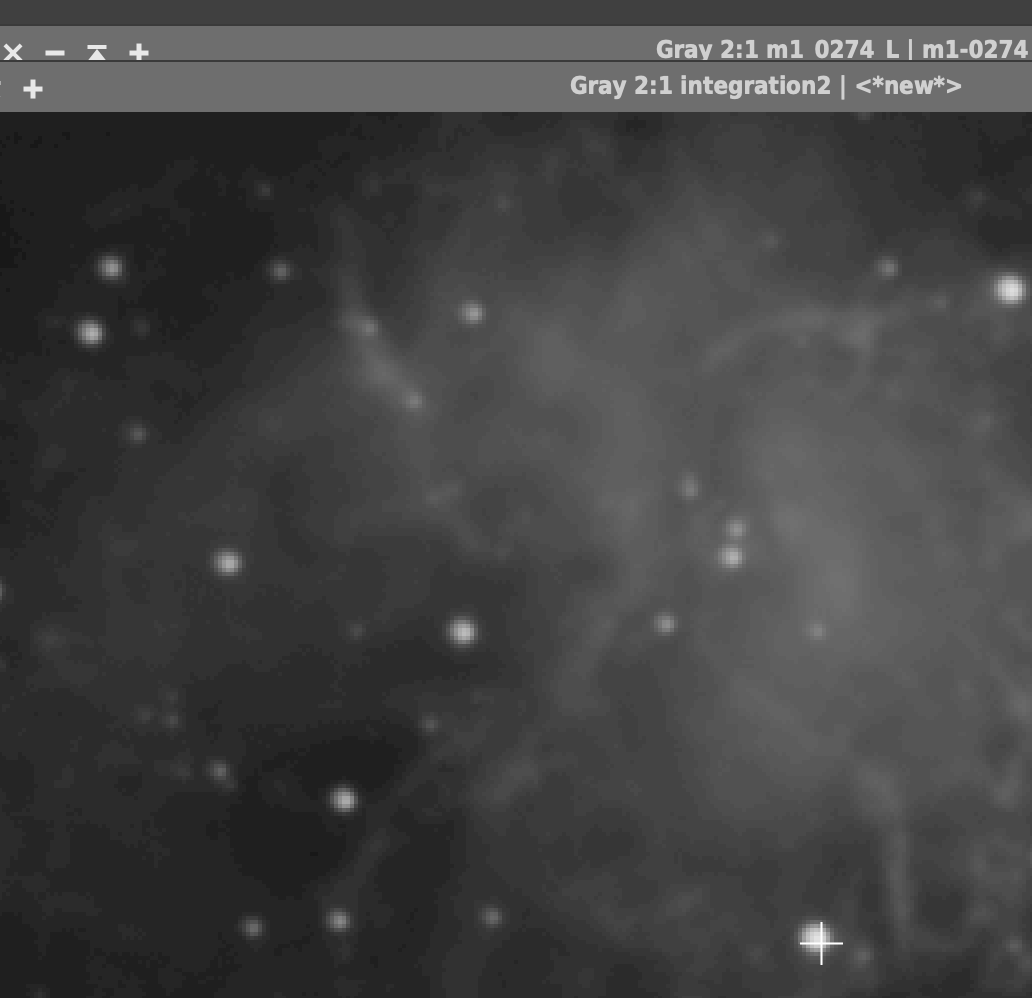

manually stretching the stacked result is very difficult, and it appears to be very dim image and it's difficult to get enough contrast to even see it without the pixilation showing up:

i would assume that i obviously need to increase my subframe exposure time from 10 seconds to something more reasonable, like 180 seconds, but in my mind i think: "hey, what's the difference between 10 frames at 180 seconds vs. 180 frames at 10 seconds?" both add up to the same 1800 seconds of total exposure.
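to put numbers on that question, here's a rough per-pixel SNR sketch under a simple textbook noise model. it assumes shot noise on target signal, sky, and dark current, plus read noise added once per frame, all independent. the rates and read noise below are made-up illustration values, not measurements from my camera or data, and `stack_snr` is just a hypothetical helper name.

```python
import math


def stack_snr(n_frames: int, exposure_s: float, signal_rate: float,
              sky_rate: float, dark_rate: float, read_noise: float) -> float:
    """Per-pixel SNR of a sum (or mean) of n_frames equal exposures.

    Rates are in electrons per second per pixel; read_noise is electrons RMS.
    Assumed model: Poisson shot noise on signal, sky and dark current, plus
    read noise added once per frame, all independent.
    """
    signal = signal_rate * exposure_s  # target electrons collected in one frame
    # variance of one frame: shot noise (signal + sky + dark) plus read noise squared
    frame_variance = (signal_rate + sky_rate + dark_rate) * exposure_s + read_noise ** 2
    # summing N independent frames multiplies signal by N and variance by N;
    # averaging instead of summing scales both equally, so the SNR is the same
    return (n_frames * signal) / math.sqrt(n_frames * frame_variance)


# hypothetical per-pixel values, chosen only for illustration
params = dict(signal_rate=0.5, sky_rate=2.0, dark_rate=0.01, read_noise=3.0)
long_subs = stack_snr(10, 180, **params)
short_subs = stack_snr(180, 10, **params)
print(f"10 x 180 s: SNR {long_subs:.1f}")
print(f"180 x 10 s: SNR {short_subs:.1f}")
```

with these made-up numbers the 10 x 180 s stack comes out ahead (roughly 13.3 vs 11.5), because the 180-frame stack pays the read-noise penalty 180 times instead of 10. with `read_noise=0.0` the two cases come out identical, which matches the "same total exposure" intuition.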

it just seems like a single frame actually looks better than a stack of 180 frames, which confuses me.

any insight that could be offered would be appreciated!
