Hello,

I've almost used up my trial period, and although I'm (for now) only doing planetary imaging, I really like PI and think I'm going to keep it. I can do most of the things I do in other software with PI, but I am missing one feature for which I still have to open up the old and buggy Registax: RGB Balance. I am not talking about stretching, which I know how to do, but about automatically aligning all RGB channels with a single click, as in Registax.

I think it should be possible to do this with PixelMath. Gerald Wechselberger has a tutorial on that which goes roughly in the right direction (aligning to blue):

R: $T - (med($T[0])-med($T[2]))

G: $T - (med($T[1])-med($T[2]))

B: $T
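
To make clear what I understand these expressions to do, here is a minimal NumPy sketch (not PixelMath itself). It assumes the image is a float array in [0, 1] with channels ordered R, G, B, and that out-of-range results are truncated rather than rescaled; the function name `align_medians_to_blue` is just something I made up for illustration.

```python
import numpy as np

def align_medians_to_blue(img):
    """Shift R and G so their medians match the blue median.

    img: float array of shape (H, W, 3), channels ordered R, G, B (assumed).
    """
    med = np.median(img, axis=(0, 1))   # one median per channel
    shifted = img - (med - med[2])      # blue offset is zero, so B is unchanged
    return np.clip(shifted, 0.0, 1.0)   # assumption: truncate to [0, 1]

# Synthetic test image: each channel has a different level AND a different spread.
rng = np.random.default_rng(0)
levels = np.array([0.30, 0.40, 0.50])
spreads = np.array([0.01, 0.02, 0.03])
demo = levels + spreads * rng.standard_normal((64, 64, 3))

result = align_medians_to_blue(demo)
print("medians:", np.median(result, axis=(0, 1)))
print("spreads:", result.std(axis=(0, 1)))
```

In this sketch the three medians end up equal, but each channel keeps its own spread, because a pure offset only moves a histogram sideways and never changes its width. That may be why the curves still don't line up for me.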

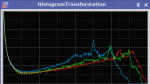

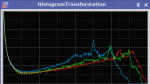

This changes the histogram from this:

To this. It only flattens the curves; they are still not aligned:

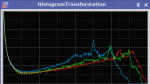

What I want to achieve is this:

Can anyone tell me how to do it?

Clear skies!

raphael
