There was a thread about "Preparing color-balanced OSC flats in PixInsight" (https://www.cloudynights.com/topic/667524-preparing-color-balanced-osc-flats-in-pixinsight/) at the Cloudy Nights forum some time ago. Unfortunately, no consensus was reached and the thread came to nothing. The original poster has also not answered my recent private messages, so I think it is appropriate to discuss the topic here.

The main questions that were raised in the cited thread were:

1) How can we judge a mosaiced MasterFlat? (e.g. comparison of uncalibrated light frames with calibrated ones and with the MasterFlat)

2) Why does flat field correction of OSC data cause a very strong color cast?

3) How can the strong color cast be avoided?

4) Does the strong color cast after normal flat field correction of OSC data degrade the SNR of the images?

Appended is a typical MasterFlat of my ASI294MC Pro (gain 120, offset 30, -10 ?C, flat field box undimmed, 40 flat frames, exposure time 0.003 s; the flat frames were calibrated with a MasterFlatDark and integrated) and its histogram.

The bayer/mosaic pattern of the camera is RGGB, PixInsight's assignmnet of the CFA channels is:

1) Judgement of the Flat field correction / the MasterFlat

The MasterFlat cannot be judged from this representation. The reason is that the bayered representation does not show any dust donuts due to the large intensity difference of CFA1, CFA2 (green, strong) and CFA0, CFA3 (red and blue, weak). The application of a STF Auto Stretch worsens the situation, the result beeing dimmer and not more contrasty.

In the debayered MasterFlat dust donuts can be made out, but the image has a very strong green color cast even if the 'Link RGB channels' option of STF is disabled.

In order to see the dust donuts one has to split the data into CFA channels with SplitCFA. The separated channels can be judged with STF Auto Stretch. With the above MF the Statistics look as follows ('Unclipped' option of Statistics disabled):

2) Color cast caused by Flat field correction

Let us recall how the flat correction is performed with OSC data in PixInsight:

Cal = (LF - MD) / MF * s0

Cal: Calibrated light frame

LF: light frame

MD: MasterDark

MF: MasterFlat

s0: Master flat scaling factor = mean(MF)

For OSC data, Pixinsight uses one and the same s0 in the flat field correction for each channel. For the present MF, the overall factors for each channel are:

CFA0: s0/mean(MF_CFA0) = 1.44

CFA1: s0/mean(MF_CFA1) = 0.77

CFA2: s0/mean(MF_CFA2) = 0.77

CFA3: s0/mean(MF_CFA3) = 1.40

From these data it is evident that the flat field correction causes a very strong color cast to violet when one compares the original light frames with the calibrated ones. So whereas the uncalibrated light frames have a strong green color cast, the calibrates ones have a very strong red color cast.

3) Modified MasterFlat

Actually the flat field correction should not use one s0 for all CFA channels but the mean of the particular channel for each channel separately. One can achieve this by modifying the original MF. Note that multiplying channels of a MF with a factor is an allowed operation provided that

- no rescale is performed and

- no clipping of data occurs.

So I set up a PixelMath expression that does the modification of the MF in the desired way, i.e. multiply each CFA channel with the mean of the channel with lowest signal (in this case CFA0) divided by the mean of the particular channel:

RGB/K: iif(x()%2==0 && y()%2==0, $T, iif(x()%2==0 && y()%2!=0, m0/m1*$T, iif(x()%2!=0 && y()%2==0, m0/m2*$T, m0/m3*$T)))

Symbols: m0=mean(MF_CFA0), m1=mean(MF_CFA1), m2=mean(MF_CFA2), m3=mean(MF_CFA3)

Important: the 'Rescale result' option must be disabled!

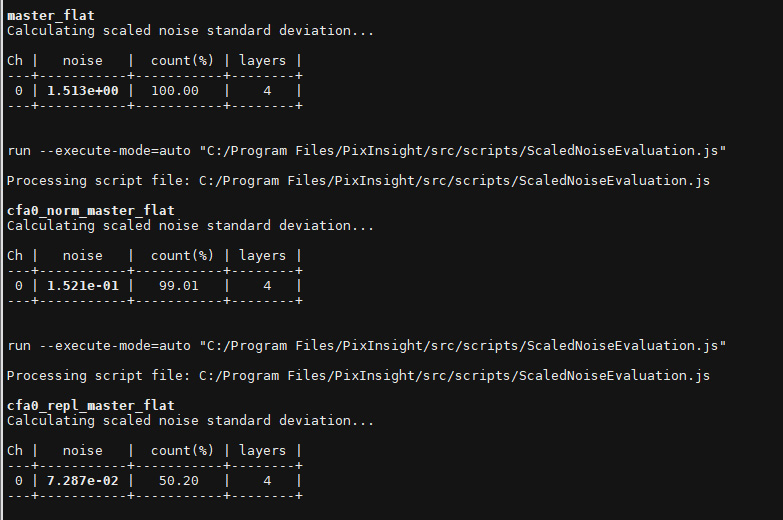

The statistics of the MF modified in this way are as follows:

This modified MF (as monochrome representation of the CFA data) can be used conveniently for the judgment of the flat field correction because different illumination in the channels is balanced. the debayered modidified MF does not show a strong color cast. There was, however, a slight color gradient visible: left side greenish, right side reddish. The modified MF does not cause a color cast when used for the flat field correction.

4) Influence on SNR

The most important question was whether the SNR of the end result is influenced by applying a normal MF (with different illumination of channels) to OSC data. In the Cloudy Night thread the assumption of some people was that the not modified MF would degrade SNR during integration (if I understood it correctly). I did not find such an effect when I compared an integration of light frames calibrated with either MF and would like to hear the arguments of the experts.

Bernd

at the Cloudy Nights forum some time ago. Unfortunately no consensus was found and the thread came to nothing. Also the original poster did not answer my private messages lately. So I think that this topic is appropriate to be discussed here.

The main questions that were raised in the cited thread were:

1) How can we judge a mosaiced MasterFlat? (e.g. comparison of uncalibrated light frames with calibrated ones and with the MasterFlat)

2) Why does flat field correction of OSC data cause a very strong color cast?

3) How can the strong color cast be avoided?

4) Does the strong color cast after normal flat field correction of OSC data degrade the SNR of the images?

Appended is a typical MasterFlat of my ASI294MC Pro (gain 120, offset 30, -10 °C, flat field box undimmed, 40 flat frames, exposure time 0.003 s; the flat frames were calibrated with a MasterFlatDark and integrated) and its histogram.

The Bayer/mosaic pattern of the camera is RGGB; PixInsight's assignment of the CFA channels is:

Code:

    0 2    R G
    1 3    G B

1) Judgement of the Flat field correction / the MasterFlat

The MasterFlat cannot be judged from this representation. The reason is that the mosaiced (bayered) representation does not show any dust donuts because of the large intensity difference between CFA1, CFA2 (green, strong) and CFA0, CFA3 (red and blue, weak). Applying an STF Auto Stretch makes the situation worse: the result becomes dimmer rather than more contrasty.

In the debayered MasterFlat dust donuts can be made out, but the image has a very strong green color cast even if the 'Link RGB channels' option of STF is disabled.

In order to see the dust donuts one has to split the data into CFA channels with SplitCFA. The separated channels can be judged with STF Auto Stretch. With the above MF the Statistics look as follows ('Unclipped' option of Statistics disabled):

Code:

                          MF       MF_CFA0       MF_CFA1       MF_CFA2       MF_CFA3
    count (%)      100.00000     100.00000     100.00000     100.00000     100.00000
    count (px)      11694368       2923592       2923592       2923592       2923592
    mean           27879.936     19409.632     36101.452     36089.172     19919.488
    median         32367.932     19356.783     36005.927     35992.244     19846.627
    variance    68851118.203    641136.407   2022116.605   2014070.630    627450.905
    stdDev          8297.657       800.710      1422.011      1419.180       792.118
    avgDev         10296.331       843.728      1490.886      1487.439       827.184
    MAD            15201.454       937.441      1648.974      1644.057       910.120
    minimum        17190.099     17190.099     32367.932     32534.088     17866.335
    maximum        39768.546     21401.955     39748.476     39768.546     22114.692

2) Color cast caused by Flat field correction

Let us recall how the flat correction is performed with OSC data in PixInsight:

Cal = (LF - MD) / MF * s0

Cal: Calibrated light frame

LF: light frame

MD: MasterDark

MF: MasterFlat

s0: Master flat scaling factor = mean(MF)
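The formula above can be sketched in plain Python for a single 2x2 Bayer cell. This is only an illustration: the function name `calibrate` and all pixel values are invented, and the further options of PixInsight's calibration process are not modeled. The raw light signal is chosen to be identical in all four pixels so that the effect of dividing by the MF and multiplying by a single s0 is easy to see.

```python
from statistics import fmean

def calibrate(lf, md, mf):
    """Apply Cal = (LF - MD) / MF * s0 with s0 = mean(MF) to a 2-D list."""
    # One s0 for the whole mosaic, as described in the text
    s0 = fmean(v for row in mf for v in row)
    return [
        [(l - d) / f * s0 for l, d, f in zip(lf_row, md_row, mf_row)]
        for lf_row, md_row, mf_row in zip(lf, md, mf)
    ]

# Hypothetical 2x2 RGGB cell (invented values, not measured data):
# weak red/blue and strong green in the flat, equal signal in the light frame.
mf = [[0.2, 0.4],
      [0.4, 0.2]]
md = [[0.01, 0.01],
      [0.01, 0.01]]
lf = [[0.11, 0.11],
      [0.11, 0.11]]

cal = calibrate(lf, md, mf)
for row in cal:
    print([round(v, 3) for v in row])
```

With these invented numbers the red and blue pixels end up twice as bright as the green ones, although all four started with the same raw signal.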

For OSC data, PixInsight uses one and the same s0 in the flat field correction for each channel. For the present MF, the overall factors for each channel are:

CFA0: s0/mean(MF_CFA0) = 1.44

CFA1: s0/mean(MF_CFA1) = 0.77

CFA2: s0/mean(MF_CFA2) = 0.77

CFA3: s0/mean(MF_CFA3) = 1.40

From these data it is evident that the flat field correction shifts the color balance strongly toward violet when one compares the original light frames with the calibrated ones: red and blue are boosted by a factor of about 1.4, while green is reduced to about 0.77. So whereas the uncalibrated light frames have a strong green color cast, the calibrated ones have a very strong red color cast.
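The four factors can be re-derived from the channel means in the Statistics output of the MasterFlat. The short sketch below does only that arithmetic; the variable names are my own, and it relies on the fact that all four CFA channels contain the same number of pixels, so the mean of the mosaic is the average of the four channel means.

```python
# Channel means copied from the Statistics output of the MasterFlat
means = {
    "CFA0": 19409.632,
    "CFA1": 36101.452,
    "CFA2": 36089.172,
    "CFA3": 19919.488,
}

# Equal pixel counts per channel, so mean(MF) is the average of the means
s0 = sum(means.values()) / len(means)

# Overall factor applied to each channel by the flat field correction
factors = {name: s0 / m for name, m in means.items()}

print(f"s0 = {s0:.3f}")
for name, f in factors.items():
    print(f"{name}: {f:.2f}")
```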

3) Modified MasterFlat

Actually, the flat field correction should not use one s0 for all CFA channels but, for each channel separately, the mean of that channel. One can achieve this by modifying the original MF. Note that multiplying the channels of an MF by a factor is an allowed operation provided that

- no rescale is performed and

- no clipping of data occurs.

So I set up a PixelMath expression that modifies the MF in the desired way, i.e. it multiplies each CFA channel by the mean of the channel with the lowest signal (in this case CFA0) divided by the mean of that channel:

RGB/K: iif(x()%2==0 && y()%2==0, $T, iif(x()%2==0 && y()%2!=0, m0/m1*$T, iif(x()%2!=0 && y()%2==0, m0/m2*$T, m0/m3*$T)))

Symbols: m0=mean(MF_CFA0), m1=mean(MF_CFA1), m2=mean(MF_CFA2), m3=mean(MF_CFA3)

Important: the 'Rescale result' option must be disabled!
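For readers who want to check the logic outside PixInsight, here is a minimal Python sketch of the same per-channel scaling. It is not the PixelMath code itself: the helper names (`cfa_index`, `channel_means`, `balance`) and the 4x4 test mosaic are invented, and the reference channel is picked as the one with the lowest mean instead of being hard-coded to CFA0. Since every factor is then at most 1, no value can be pushed above the original maximum, so no clipping occurs.

```python
from statistics import fmean

def cfa_index(x, y):
    """CFA channel number of pixel (x, y) for the layout 0 2 / 1 3."""
    return 2 * (x % 2) + (y % 2)

def channel_means(img):
    """Mean of each CFA channel of a mosaiced image given as a list of rows."""
    groups = {0: [], 1: [], 2: [], 3: []}
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            groups[cfa_index(x, y)].append(v)
    return [fmean(groups[c]) for c in range(4)]

def balance(img):
    """Scale every channel so that its mean equals the lowest channel mean."""
    m = channel_means(img)
    ref = min(m)  # channel with the lowest signal, factors are <= 1
    return [
        [ref / m[cfa_index(x, y)] * v for x, v in enumerate(row)]
        for y, row in enumerate(img)
    ]

# Hypothetical 4x4 mosaic with weak R/B and strong G (invented values)
mf = [
    [0.30, 0.55, 0.31, 0.56],
    [0.54, 0.30, 0.55, 0.31],
    [0.29, 0.54, 0.30, 0.55],
    [0.55, 0.31, 0.56, 0.32],
]

mf_mod = balance(mf)
print([round(v, 6) for v in channel_means(mf_mod)])
```

After the scaling all four channel means are equal, which corresponds to the behaviour of the modified MF described here.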

The statistics of the MF modified in this way are as follows:

Code:

                 MF_mod_CFA0   MF_mod_CFA1   MF_mod_CFA2   MF_mod_CFA3
    count (%)      100.00000     100.00000     100.00000     100.00000
    count (px)       2923592       2923592       2923592       2923592
    mean           19409.632     19409.632     19409.632     19409.632
    median         19356.783     19358.273     19357.501     19338.635
    variance      641136.407    584509.319    582579.815    595741.680
    stdDev           800.710       764.532       763.269       771.843
    avgDev           843.728       801.562       799.981       806.011
    MAD              937.441       886.555       884.216       886.825
    minimum        17190.099     17402.336     17497.622     17409.031
    maximum        21401.955     21370.422     21388.488     21548.648

This modified MF (as a monochrome representation of the CFA data) can conveniently be used for judging the flat field correction because the different illumination of the channels is balanced. The debayered modified MF does not show a strong color cast. There was, however, a slight color gradient visible: greenish on the left side, reddish on the right. The modified MF does not cause a color cast when used for the flat field correction.

4) Influence on SNR

The most important question was whether the SNR of the end result is influenced by applying a normal MF (with different illumination of the channels) to OSC data. In the Cloudy Nights thread, some people assumed that the unmodified MF would degrade SNR during integration (if I understood it correctly). I did not find such an effect when I compared integrations of light frames calibrated with either MF, and I would like to hear the arguments of the experts.
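One simple consideration that is at least consistent with this observation: a constant factor applied to a whole channel scales signal and noise alike. The sketch below checks this numerically under strong simplifications. SNR is taken as sample mean divided by sample standard deviation, the pixel values are invented, and debayering, normalization, rejection and weighting during integration are ignored, so it does not settle the question.

```python
from statistics import fmean, stdev

def snr(samples):
    """Crude SNR estimate: sample mean divided by sample standard deviation."""
    return fmean(samples) / stdev(samples)

# Invented pixel values of one CFA channel in a uniform sky patch
patch = [0.101, 0.098, 0.103, 0.097, 0.100, 0.102, 0.099, 0.100]

k = 1.44  # a per-channel factor of the size found for CFA0
scaled = [k * v for v in patch]

print(snr(patch), snr(scaled))
```

Both printed values agree up to floating-point rounding, because the factor k cancels in the ratio.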

Bernd