PixInsight 1.6.1 introduces a new image calibration tool: DefectMap. This tool fixes bad pixels (e.g., hot and cold pixels) by replacing them with values computed from neighboring valid pixels. DefectMap was written by PTeam member Carlos Milovic and has been released as an open-source tool, part of the standard PixInsight ImageCalibration module, under the GPL v3 license. The full source code of the ImageCalibration module is included in all standard PCL distributions.

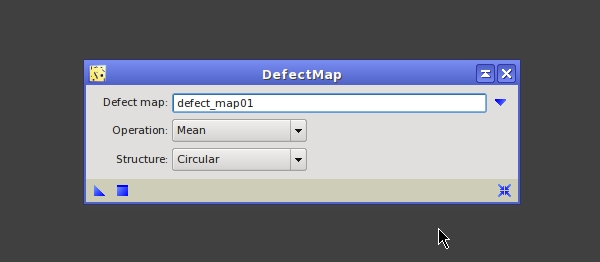

DefectMap provides three working parameters:

Defect map

This is the identifier of a view that will be used as the defect map of the process. In a defect map, black (zero) pixels correspond to bad or defective pixels, which will be replaced with new values computed from neighboring pixels. When a convolution replacement operation (Gaussian, mean) is used, nonzero map values act as the pixel weights for the convolution. Morphological replacement operations (minimum, maximum, median) always use binarized (0/1) map values.
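
The following Python sketch illustrates one way the defect map values described above could be interpreted. It is not the DefectMap source code. The function names, the fixed 3x3 neighborhood, and the choice to compute every replacement from the original (unrepaired) image are assumptions made for illustration only.

```python
def is_bad(map_value):
    # Black (zero) pixels in the defect map mark bad pixels.
    return map_value == 0.0


def neighbor_weight(map_value, operation):
    # Convolution operations (mean, gaussian) use the map value itself as
    # the weight; morphological operations use a binarized (0/1) value.
    if operation in ("mean", "gaussian"):
        return float(map_value)
    return 1.0 if map_value > 0.0 else 0.0


def mean_replacement_3x3(image, defect_map, x, y):
    # Map-weighted mean over a 3x3 neighborhood (hypothetical fixed size).
    # Bad pixels, including the center, have weight 0 and do not contribute.
    h, w = len(image), len(image[0])
    num = den = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                wgt = neighbor_weight(defect_map[yy][xx], "mean")
                num += wgt * image[yy][xx]
                den += wgt
    # Leave the pixel unchanged if no valid neighbor exists.
    return num / den if den > 0.0 else image[y][x]


def repair(image, defect_map):
    # Returns a new image; replacements are computed from the original data.
    out = [row[:] for row in image]
    for y, row in enumerate(defect_map):
        for x, m in enumerate(row):
            if is_bad(m):
                out[y][x] = mean_replacement_3x3(image, defect_map, x, y)
    return out
```

For example, with a hot center pixel of 100 surrounded by the values 1 through 9 (excluding 5) and a map of ones with a zero at the center, `repair` replaces the center with 40 / 8 = 5.0. Lowering one neighbor's map value to 0.5 reduces its influence in the mean, while a morphological operation would still treat that neighbor as fully valid.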

Operation

Bad pixel replacement operation. This parameter specifies the convolution or morphological operation used to compute replacement values for bad pixels from their neighboring pixels. Mean and Gaussian are applied by convolution; minimum, maximum and median are applied as morphological operators.
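
As a rough illustration of the five operations, the sketch below computes a single replacement value from a list of neighbors. The `(dx, dy, value, map_value)` neighbor layout and the `sigma` parameter of the Gaussian weighting are hypothetical. The text above does not specify how DefectMap sizes its Gaussian kernel, so treat this as an assumption-laden sketch rather than the tool's actual algorithm.

```python
import math
import statistics


def replacement_value(neighbors, operation, sigma=1.0):
    """Compute a replacement for one bad pixel.

    neighbors: list of (dx, dy, value, map_value) tuples for the pixels
        covered by the structuring element (hypothetical data layout).
    sigma: Gaussian width in pixels (an assumption, not a documented
        DefectMap parameter).
    """
    if operation in ("mean", "gaussian"):
        # Convolution: map values act as weights.
        num = den = 0.0
        for dx, dy, value, m in neighbors:
            w = float(m)
            if operation == "gaussian":
                # Scale by a Gaussian of the distance to the bad pixel.
                w *= math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
            num += w * value
            den += w
        if den == 0.0:
            raise ValueError("no valid neighbors")
        return num / den

    # Morphological operators: binarize the map, keep only valid neighbors.
    valid = [value for dx, dy, value, m in neighbors if m > 0.0]
    if not valid:
        raise ValueError("no valid neighbors")
    if operation == "minimum":
        return min(valid)
    if operation == "maximum":
        return max(valid)
    if operation == "median":
        return statistics.median(valid)
    raise ValueError(f"unknown operation: {operation}")
```

A usage example: for the neighbors `(-1, 0, 2.0, 1.0)`, `(1, 0, 4.0, 1.0)`, `(0, -1, 10.0, 1.0)` and `(0, 1, 3.0, 1.0)` plus the bad center `(0, 0, 500.0, 0.0)`, the mean is 4.75, the median is 3.5, and the minimum and maximum are 2.0 and 10.0. Halving the map value of the 10.0 neighbor changes the mean but not the median, which mirrors the weighted versus binarized distinction.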

Structure

This parameter specifies the shape of the structuring element used to apply the convolution or morphological transformation for bad pixel replacement. The structuring element defines which neighboring pixels are used to compute replacement values.
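
The available shapes are not listed in this description, so the sketch below assumes four plausible ones (square, circular, horizontal, vertical) and an odd side length. These shape names and the `size` parameter are hypothetical. The function returns the pixel offsets, relative to a bad pixel, that such an element would cover.

```python
def structure_offsets(shape, size=3):
    """Return the (dx, dy) offsets covered by a structuring element.

    shape: "square", "circular", "horizontal" or "vertical"
        (hypothetical names chosen for illustration).
    size: odd side length in pixels (hypothetical parameter).
    The center (0, 0) is excluded because it is the bad pixel itself.
    """
    if size < 3 or size % 2 == 0:
        raise ValueError("size must be an odd integer >= 3")
    r = size // 2
    offsets = []
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if dx == 0 and dy == 0:
                continue
            if shape == "square":
                inside = True
            elif shape == "circular":
                # Keep offsets within a disk of radius r.
                inside = dx * dx + dy * dy <= r * r
            elif shape == "horizontal":
                inside = dy == 0
            elif shape == "vertical":
                inside = dx == 0
            else:
                raise ValueError(f"unknown shape: {shape}")
            if inside:
                offsets.append((dx, dy))
    return offsets
```

For instance, `structure_offsets("horizontal", 3)` yields `[(-1, 0), (1, 0)]`. A one-dimensional element like this draws replacement values only from pixels in the same row. That could suit defects such as bad columns, where vertical neighbors are themselves defective.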
