Hi everybody,

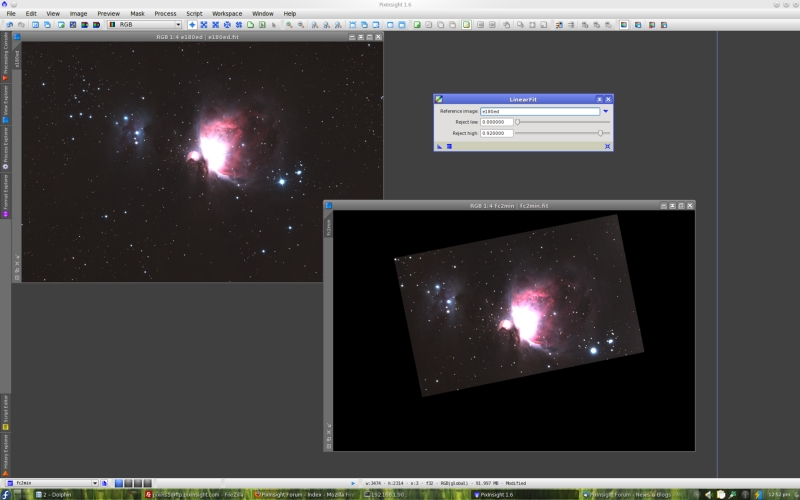

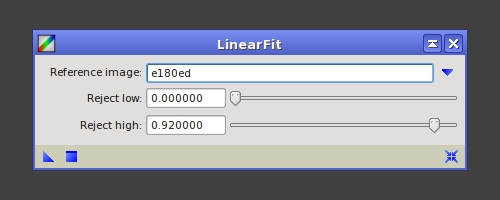

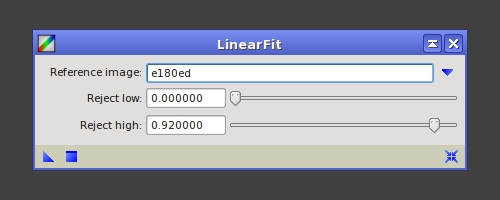

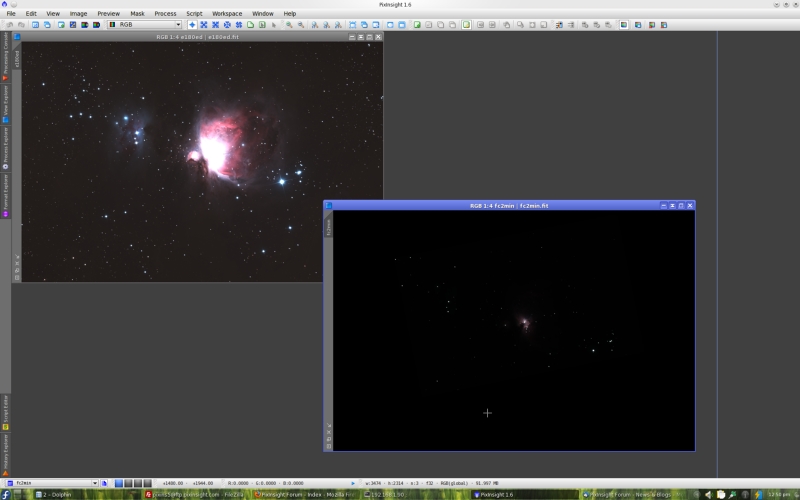

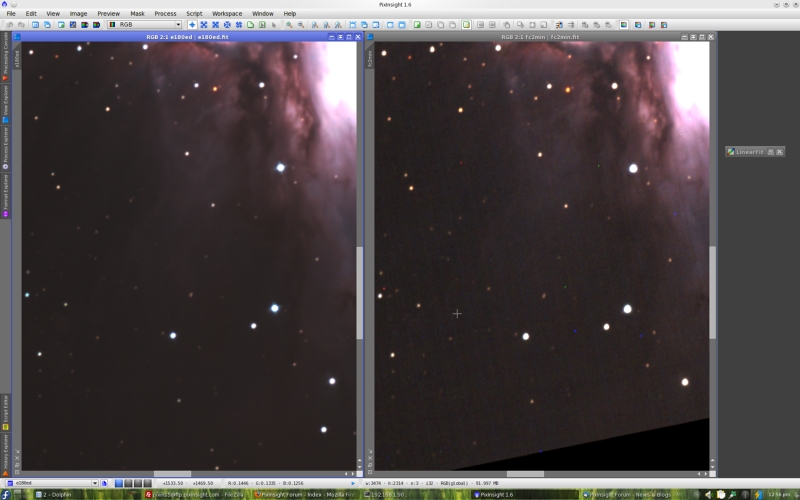

I have just announced the availability of a new standard tool: LinearFit. The interface of LinearFit is rather simple, as shown below.

Despite the simple interface, the process is quite sophisticated and delivers great results, as you'll see in the example below.

LinearFit takes three parameters: a reference image and the two boundaries of a sampling interval in the normalized [0,1] range. When you apply LinearFit to a target image, it computes a set of linear fitting functions (one for each channel) and applies them to match the mean background and signal levels of the target image to those of the reference image. The fitting functions are computed from the sets of pixels whose values fall within the sampling interval. For a pixel value v to be used, it must satisfy:

rlow < v < rhigh

where rlow and rhigh are, respectively, the low and high rejection limits.
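To make the sampling rule concrete, here is a minimal sketch in Python. It is not LinearFit's actual code. The function name `sample_pairs` and the pixel values are made up for illustration, the default limits are the ones from the console output in this post, and requiring both the target and the reference pixel to lie inside the interval is my assumption.

```python
def sample_pairs(target, reference, rlow=0.0, rhigh=0.92):
    """Return the (target, reference) value pairs usable for fitting.

    Assumption: a pair is kept only when BOTH values lie strictly inside
    the open interval ]rlow, rhigh[.
    """
    return [
        (t, r)
        for t, r in zip(target, reference)
        if rlow < t < rhigh and rlow < r < rhigh
    ]


# Hypothetical normalized pixel values from one channel of two registered images.
target = [0.000, 0.002, 0.004, 0.950, 0.010]
reference = [0.100, 0.050, 0.180, 0.990, 1.000]

pairs = sample_pairs(target, reference)
print(pairs)
print(f"sampled: {100 * len(pairs) / len(target):.2f}%")
```

Pure black and saturated pixels fall outside the open interval, so they never contribute to the fit.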

Below you can see an example. The image to the left is a single 10-minute shot of the M42 region taken with a Takahashi Epsilon 180ED telescope, 18 cm of aperture at f/2.8. The image to the right is a single 2-minute shot with a Takahashi FS102 refractor, 10 cm at f/8. Both images were acquired with a modified Canon 300D DSLR camera. Both are raw linear images, shown without any STF applied.

Now this is the result after applying LinearFit to match the second image to the first:

Note how the second, short-exposure image has been accurately adapted to match the 180ED long exposure. Naturally, the short exposure has a very low SNR compared to the long one. In fact, this is a nice practical demonstration of some key concepts, such as signal-to-noise ratio as a function of exposure and field illumination, and image scaling. This is shown, along with the accuracy of the fit, in the comparison below.

LinearFit computes a linear fitting function of the form:

y = a + b*x

The coefficients a and b are calculated using a robust algorithm that minimizes the average absolute deviation of the fitted line with respect to the images being matched.
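For readers who want to see what minimizing the average absolute deviation means in practice, here is a small Python sketch. It is not the algorithm LinearFit uses, which is not described in this post. It is a brute-force least-absolute-deviations fit that relies on a known property: at least one optimal line passes through two of the sample points. The names `mean_abs_dev` and `lad_fit` and the sample data are hypothetical.

```python
from itertools import combinations


def mean_abs_dev(a, b, xs, ys):
    """Average absolute deviation of the line y = a + b*x from the samples."""
    return sum(abs(y - (a + b * x)) for x, y in zip(xs, ys)) / len(xs)


def lad_fit(xs, ys):
    """Least-absolute-deviations line fit by exhaustive search over point pairs.

    Cost is O(n^3): fine for a handful of samples, far too slow for the
    millions of pixels in a real image.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # vertical line, cannot be written as y = a + b*x
        b = (y2 - y1) / (x2 - x1)
        a = y1 - b * x1
        dev = mean_abs_dev(a, b, xs, ys)
        if best is None or dev < best[2]:
            best = (a, b, dev)
    if best is None:
        raise ValueError("need at least two samples with distinct x values")
    return best


# Hypothetical samples on y = 1 + 2*x, except for one outlier at x = 5.
xs = [0, 1, 2, 3, 4, 5]
ys = [1, 3, 5, 7, 9, 30]
a, b, sigma = lad_fit(xs, ys)
print(f"y = {a:+.6f} + {b:.6f}*x, sigma = {sigma:.6f}")
```

The outlier raises the reported deviation but does not pull the line away from the other five points, which is what "robust" means here. A least-squares fit would be dragged toward the outlier.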

Both the reference and target images must be registered beforehand. This is necessary because if the images are not aligned, there is no correlation between the two sets of sampled pixels, and the fitted function will in general be wrong or meaningless. A b scaling coefficient that is close to zero or negative is a sign of this problem, but occasionally you can get an apparently reasonable fitting function even for dissimilar images.
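If you script your workflow, the warning sign described above can be turned into a simple check. This is only a sketch in Python. The function name `fit_is_suspicious` and the threshold `min_scale` are hypothetical, not part of LinearFit.

```python
def fit_is_suspicious(b, min_scale=1e-3):
    """Flag a fit whose scaling coefficient is negative or close to zero.

    min_scale is an arbitrary threshold chosen for illustration only.
    """
    return b < min_scale


# Hypothetical b coefficients from three different fits.
for b in (67.236738, 0.0002, -1.3):
    status = "suspicious" if fit_is_suspicious(b) else "plausible"
    print(f"b = {b:+.6f}: {status}")
```

A fit that passes this check is not guaranteed to be meaningful, since unregistered images can still produce a plausible-looking b.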

LinearFit writes some useful information on the console. For example, this is the output generated when I applied LinearFit to the images shown in the screenshot above:

    LinearFit: Processing view: fc2min
    Writing swap files...
    902.01 MB/s
    Sampling interval: ]0.000000,0.920000[
    Fitting images: done.
    Linear fit functions:
    y0 = -0.086368 + 67.236738·x0
    σ0 = +0.017761
    N0 = 35.57% (2859272)
    y1 = -0.142312 + 64.684015·x1
    σ1 = +0.014826
    N1 = 36.08% (2900658)
    y2 = -0.188776 + 78.253923·x2
    σ2 = +0.016941
    N2 = 35.82% (2879668)

Along with the fitting functions (y = a + b*x), LinearFit informs you about the achieved average absolute deviation (sigma values) and the percentage of pixels that have been sampled to compute the fitting coefficients. The lower the sigma, the better: a low sigma means that the fitted line closely represents the set of sampled pixel value pairs. The number of sampled pixels depends exclusively on the sampling interval and on the overlapping region between both images. The example shown here is quite extreme; we know this from the relatively large b coefficients (about 67, 64 and 78, respectively, for the red, green and blue channels).
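To get a feeling for what such large b coefficients mean, the following Python sketch plugs the coefficients from the console output into y = a + b*x. The names `FITS`, `apply_fit` and `input_range` are made up for this example. Truncating the result to [0,1] is my assumption, since the post does not say how out-of-range values are handled.

```python
# Coefficients (a, b) copied from the console output, one pair per channel.
FITS = {
    "R": (-0.086368, 67.236738),
    "G": (-0.142312, 64.684015),
    "B": (-0.188776, 78.253923),
}


def apply_fit(x, a, b):
    """Map a target pixel value x to y = a + b*x, truncated to [0, 1].

    The truncation is an assumption made for this sketch.
    """
    return min(1.0, max(0.0, a + b * x))


def input_range(a, b):
    """Target values that map to 0 and to 1 (valid for b > 0)."""
    return (-a / b, (1.0 - a) / b)


for channel, (a, b) in FITS.items():
    lo, hi = input_range(a, b)
    y = apply_fit(0.002, a, b)
    print(f"{channel}: x in [{lo:.6f}, {hi:.6f}] fills [0, 1]; 0.002 -> {y:.6f}")
```

For the red channel, target values between roughly 0.0013 and 0.016 are stretched over the whole [0,1] range. This is why the short exposure looks so noisy after fitting.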