Hi all,

A new standard tool is now available for all supported platforms: HDRComposition.

HDRComposition allows you to integrate a series of images of the same subject with varying exposures into a single high dynamic range composite image. HDRComposition implements basically the same algorithm originally created by Vicent Peris, which Oriol Lehmkuhl already implemented as a script two years ago. However, this new tool uses a completely different image scaling algorithm (also published as the new LinearFit tool) that is much more accurate and robust, and it works in a much more automatic way. In fact, you will normally need to adjust only one parameter, and most of the time you'll get an excellent result with just the default parameter values.
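To make the idea of image scaling a bit more concrete, here is a minimal sketch of fitting one exposure to another with a straight line. It assumes a plain least-squares fit on a handful of toy pixel values. The function name `linear_fit` and the data are made up for illustration, and the actual fitting method used by LinearFit and HDRComposition is not described in this post, so don't take this as the tool's implementation.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit of y ~ a + b*x; returns (a, b).

    Assumption: plain least squares stands in for whatever fitting
    method the real tool uses.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

# Toy data: the same four unsaturated pixels in a short and a long exposure.
short = [0.01, 0.02, 0.05, 0.10]
long_ = [0.003 + 6.0 * p for p in short]  # 6x the signal plus a small pedestal

offset, scale = linear_fit(short, long_)

# Bring the short exposure onto the numeric scale of the long one.
short_rescaled = [offset + scale * p for p in short]
print(offset, scale)
```

Once both exposures are on the same scale, pixels from the short exposure can stand in for saturated pixels in the long one.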

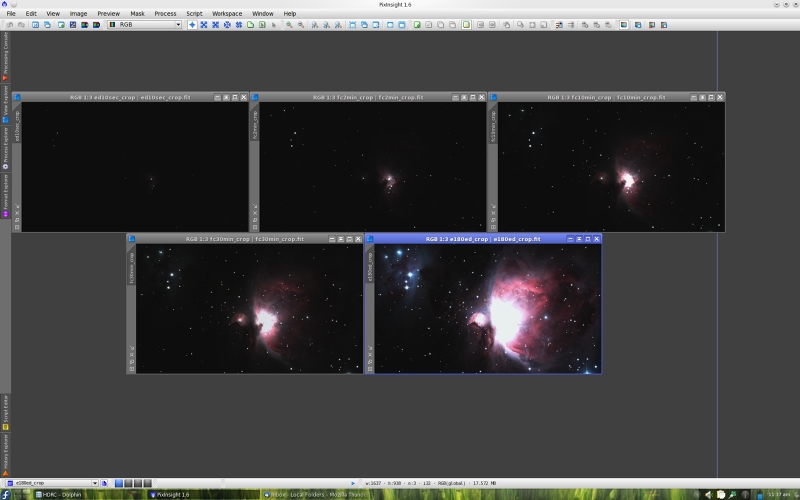

To give you an idea of how HDRComposition works, I've prepared a small example with a set of five images of the M42 region by Vicent Peris and José Luis Lamadrid. You can see them in the screenshot below.

These are raw linear images acquired with a modified Canon 300D DSLR camera through three different instruments: a Takahashi FC-100 refractor (10 cm @ f/8), a Celestron 80ED (8 cm @ f/7.5), and a Takahashi Epsilon 180ED telescope (18 cm @ f/2.8). The exposures are 10 seconds with the 80ED; 2, 10 and 30 minutes with the FC-100; and 10 minutes with the Epsilon 180ED, all taken from a very dark location in Teruel, Spain.

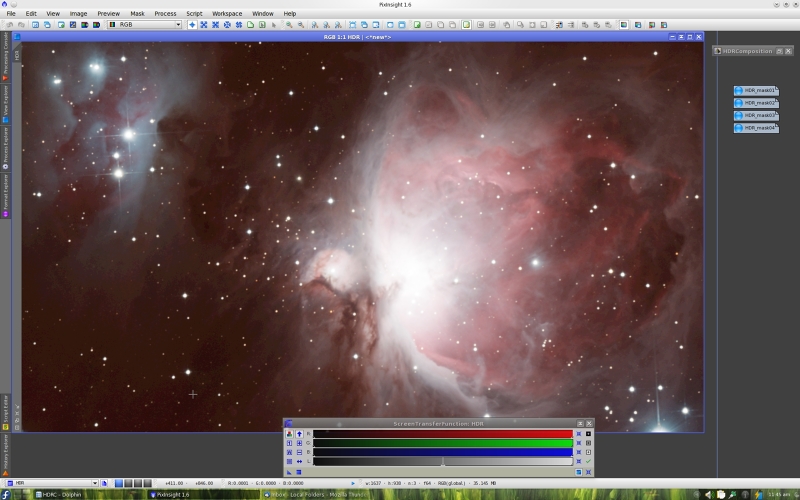

Our goal is to integrate all of these exposures into a high dynamic range image. Here is the result after running HDRComposition with default parameters:

Note that the set of source images can be specified in any order; HDRComposition automatically computes the relative exposure of each image and sorts the list accordingly. This routine is very robust, and works reliably without requiring any metadata about exposure times, ISO speed, CCD parameters, etc. (HDRComposition ignores image metadata). In the rare case that you need to specify the exposure order manually (which should not happen in any situation we can think of), you can disable the Automatic exposure evaluation option.
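As a rough illustration of sorting by relative exposure from pixel data alone, here is a small sketch. It assumes the relative exposure can be estimated as the median ratio between an image and a reference image over pixels that are neither too dark nor saturated in both. The post does not say how HDRComposition actually computes it, and the names `relative_exposure` and `sort_by_exposure` as well as the `low`/`high` limits are hypothetical.

```python
from statistics import median

def relative_exposure(image, reference, low=0.02, high=0.9):
    """Median pixel ratio image/reference over pixels usable in both.

    Assumption: this estimator is only a stand-in. It also needs some
    pixels inside (low, high) in both images, which very different
    exposures may not have.
    """
    ratios = [p / r for p, r in zip(image, reference)
              if low < p < high and low < r < high]
    return median(ratios)

def sort_by_exposure(images):
    """Return the images ordered from longest to shortest exposure."""
    reference = images[0]
    return sorted(images,
                  key=lambda img: relative_exposure(img, reference),
                  reverse=True)

# Toy data: the same scene at 1x, 2x and 0.5x exposure, in arbitrary order.
a = [0.05, 0.1, 0.2, 0.4]
b = [0.1, 0.2, 0.4, 0.8]
c = [0.025, 0.05, 0.1, 0.2]

ordered = sort_by_exposure([a, b, c])
print(ordered)
```

No exposure time or ISO value is used anywhere; the ordering comes only from the pixel values.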

The resulting HDR image is extremely dark, as expected. It is a linear image with a huge dynamic range (the whole range of the M42/M43 region) stored in a numeric range of at least 2^30 discrete sample values. Note that this HDR image does not fit into a 32-bit floating point image, where you can store a maximum of about 2^24 discrete values. This HDR image requires either the 32-bit unsigned integer format or the 64-bit floating point format, both available in PixInsight. The HDRComposition tool generates 64-bit floating point images by default, although 32-bit floating point can also be used as an option suitable for moderately large HDR compositions.
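If you want to check the precision argument yourself, the following short sketch compares two sample values that differ by one step of 2^-30. It round-trips them through 32-bit floating point using Python's standard `struct` module. The helper name `to_float32` and the chosen sample values are just for illustration.

```python
import struct

def to_float32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

step = 2.0 ** -30          # one step of a 2^30-level range
a = 0.5
b = 0.5 + step             # the next sample value up

same_in_32 = to_float32(a) == to_float32(b)   # both collapse to 0.5
same_in_64 = a == b                           # still distinct

# With 32-bit unsigned integers the two values also stay distinct.
levels = 2 ** 32 - 1
same_in_u32 = round(a * levels) == round(b * levels)

print(same_in_32, same_in_64, same_in_u32)
```

Near 0.5 a 32-bit float can only resolve steps of 2^-24, so the two values become identical, while 64-bit floating point and 32-bit unsigned integers keep them apart.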

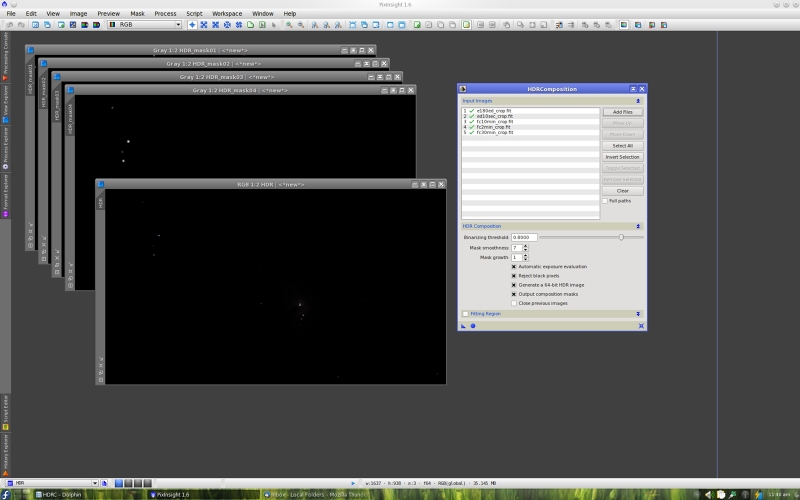

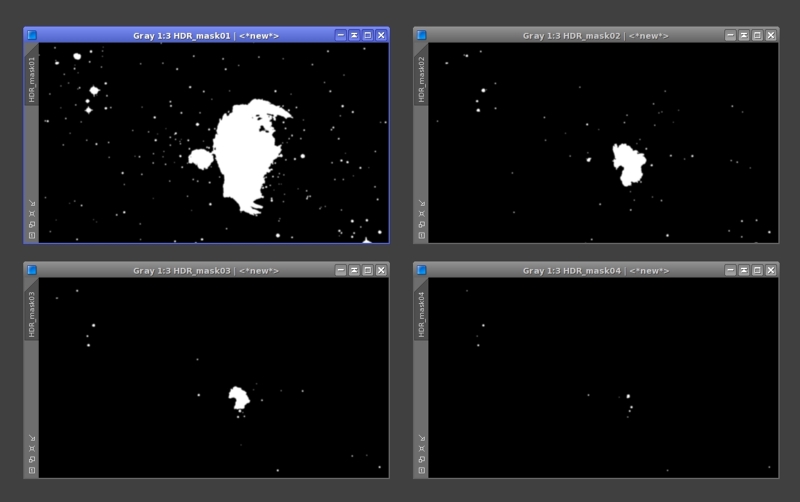

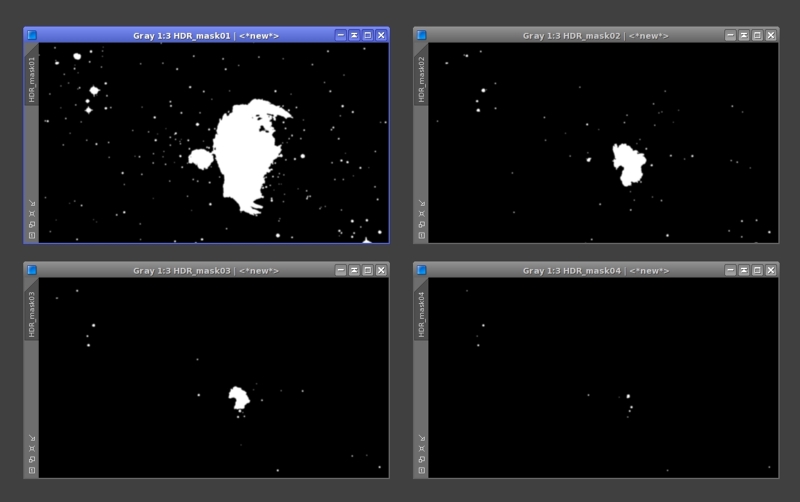

HDRComposition also provides, as an option, the set of composition masks used internally. Each composition mask is white where the pixels on a long exposure have been replaced with the corresponding pixels from the next exposure in decreasing exposure order. You can see the four masks generated in this example on the screenshot below.

Composition masks give you full control over the process. They are initially generated as binary images (only 0 and 1 values). The Binarizing threshold parameter defines where to cut long exposures to replace their pixels with pixels from the next image in decreasing order of exposure. Normally the default value of 0.8 is quite appropriate, but you can change it if you see that the masks are not driving the composition correctly (which is rare). After binarization, the masks are smoothed to prevent artifacts generated by hard masking transitions. The degree of low-pass filtering applied to composition masks can be controlled with the Mask smoothness parameter, although the default value will usually give excellent results.
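The binarize, smooth and replace steps can be sketched on a single row of pixels as follows. This is only an illustration under a few assumptions: the mask is white where the long exposure exceeds the threshold, the low-pass filter is a simple moving average, and the shorter exposure has already been rescaled to match the long one. The function names and the `radius` parameter are hypothetical and do not correspond to the tool's internals.

```python
def binarize(pixels, threshold=0.8):
    """Mask is 1 where the long exposure is above the threshold, else 0."""
    return [1.0 if p > threshold else 0.0 for p in pixels]

def smooth(mask, radius=1):
    """Moving-average low-pass filter with clamped edges (an assumption)."""
    n = len(mask)
    out = []
    for i in range(n):
        window = [mask[min(max(j, 0), n - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def compose(long_exp, short_scaled, threshold=0.8, radius=1):
    """Blend: long exposure where the mask is black, short where it is white."""
    mask = smooth(binarize(long_exp, threshold), radius)
    return [l * (1.0 - m) + s * m
            for l, s, m in zip(long_exp, short_scaled, mask)]

# Toy row: two saturated pixels in the long exposure.
long_exp = [0.1, 0.3, 1.0, 1.0, 0.2]
short_scaled = [0.1, 0.3, 1.7, 2.4, 0.2]   # short exposure, already rescaled

hard_mask = binarize(long_exp)
hard_result = compose(long_exp, short_scaled, radius=0)  # no smoothing
soft_result = compose(long_exp, short_scaled, radius=1)  # softened transition
print(hard_mask, hard_result, soft_result)
```

With `radius=0` the saturated pixels are simply replaced; a larger radius spreads the transition over neighbouring pixels, which is the role the Mask smoothness parameter plays in the text above.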

Here is the HDR image with an automatic STF applied. STFs are 16-bit look-up tables in PixInsight. Note that a 16-bit LUT causes posterization in the screen representation of this image. There is no surprise here, since as noted this is a 64-bit floating point image storing more than 2^30 discrete values.
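To see why a 16-bit table posterizes such a dark image, here is a small sketch that counts how many distinct levels survive when faint sample values are quantized to 16 bits. It assumes the LUT is indexed by the pixel value rounded to one of 65536 levels, and the faint range [0, 0.0001] and the `quantize` helper are chosen only for illustration.

```python
def quantize(x, bits=16):
    """Round a value in [0, 1] to the nearest level of a 'bits'-bit table."""
    levels = (1 << bits) - 1
    return round(x * levels) / levels

# 1001 distinct 64-bit sample values covering the faint range [0, 0.0001].
samples = [i * 1e-7 for i in range(1001)]

distinct_64 = len(set(samples))
distinct_16 = len({quantize(s) for s in samples})

print(distinct_64, distinct_16)
```

Only a handful of 16-bit levels cover that whole range, so any stretch applied through the table can show only that many steps there, no matter how many different values the 64-bit image actually holds.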

Below is the image after BackgroundNeutralization. Note the tiny upper bound of the background sampling range: 0.0001.

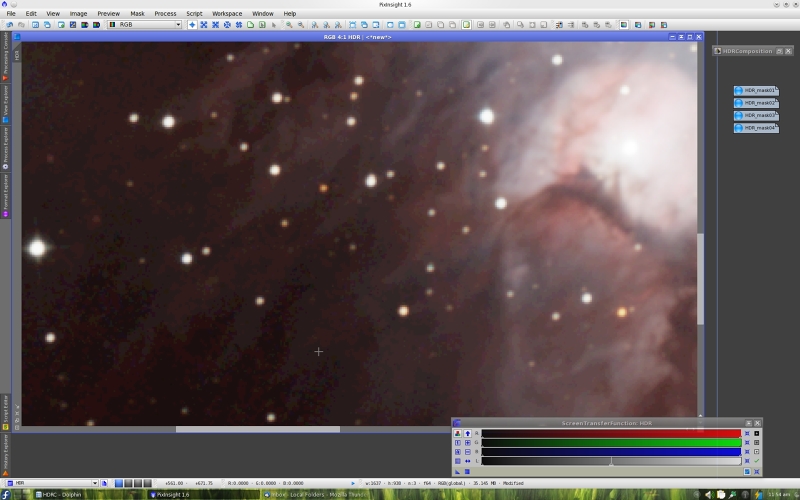

To better evaluate the numerical depth of this linear HDR image, here is a zoomed area with automatic STF:

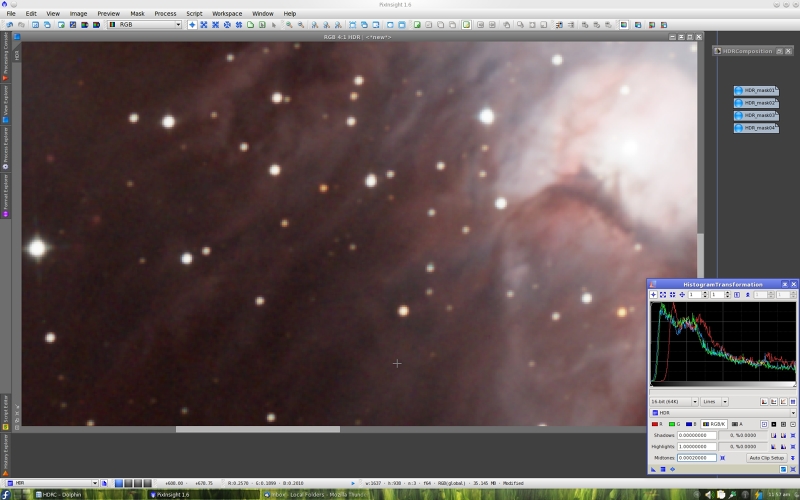

Note the strong posterization caused by the 16-bit rendition of the image (please click the image to see the full-size version). Now you can see and show a clear example of what happens when 16 bits aren't sufficient. Actually, 32-bit floating point doesn't suffice in this case, either. This is the same area after stretching the 64-bit image with HistogramTransformation:

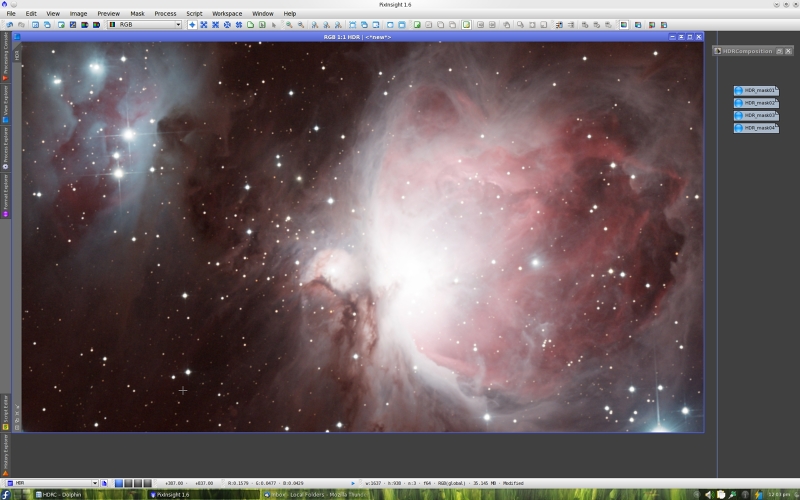

And this is the whole stretched image:

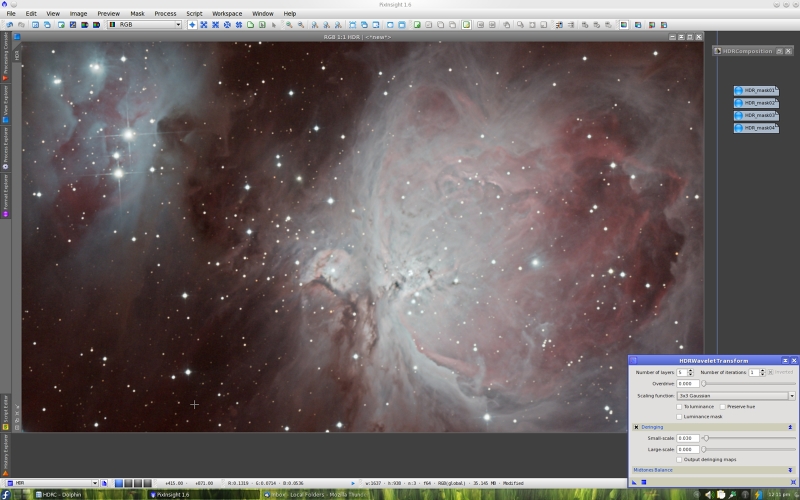

After stretching, the standard procedure in PixInsight is applying HDRWaveletTransform to compress the dynamic range of the HDR image. This is the result:

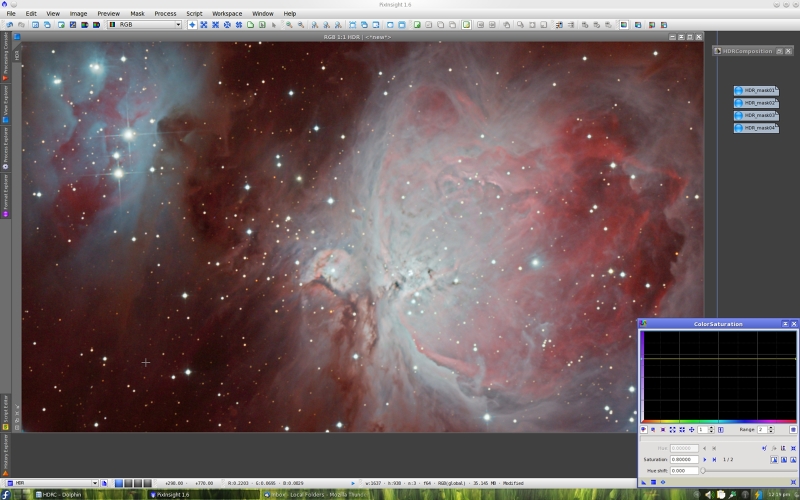

And finally, the image greatly benefits from a generous increase in color saturation. Note the chromatic richness in the following screenshot.

Summarizing:

- The best results are obtained when HDRComposition is used with linear images.

- For astronomical purposes, all the images must be accurately calibrated. You will normally calibrate, register and integrate a set of individual frames for each exposure, and then feed the results of these integrations to HDRComposition. However, you can also use single calibrated frames, as this example demonstrates.

- HDRComposition requires registered images. In other words, pixel-per-pixel correlation is required for all the images taking part in the composition; dissimilar images cannot be used.

- Additive gradients, if they are dissimilar across the set of integrated images, will hurt the accuracy of the HDR composition process. If possible, all additive gradients should be removed prior to HDR composition. This involves applying the DBE or ABE tool to the linear images.

- To speed up the process, you can define a sampling region of interest (ROI). This is a rectangular area that restricts the pixels used for image scaling. It has the additional advantage that if there are slight flat fielding errors near the edges of the images (which is frequent), you can define a central ROI to improve the accuracy of image scaling.

That's it. Happy HDR compositing!
