GaryP

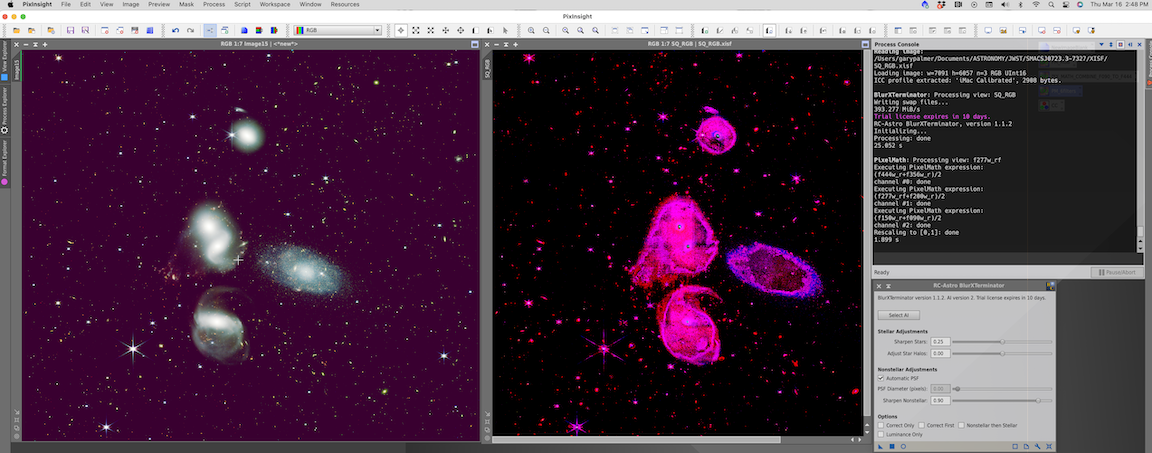

I have another 10 days on my free trial of BlurExterminator, so I need to find out quickly how well it works. Six JWST files of Stephan’s Quintet were combined in PixelMath in the usual way, in order of wavelength, grouped into three pairs: R = (f444 + f356), G = (f277 + f200)/2, and B = (f150 + f090)/2. The result is the view labeled “Image15” in the screenshot. I then applied BlurExterminator with the default values. The result is the second view from the right, which appears to be hopelessly solarized. No STF is applied to that view, but the usual STF just aggravates the problem. I was unable to improve the view with either STF or HT. Why did this happen, and how can I do better?
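For anyone who wants to check the channel ranges outside PixInsight, the pairing described above can be sketched in NumPy. This is only an illustration under assumptions: the function name `combine_pairs` is made up, the six arrays are synthetic stand-ins for the JWST frames scaled to [0, 1], and the R expression is reproduced exactly as written above, without a division by 2. How PixelMath treats values outside [0, 1] depends on its settings, which are not stated here.

```python
import numpy as np

def combine_pairs(f444, f356, f277, f200, f150, f090):
    """Combine six filter frames into an RGB cube, following the post's expressions."""
    r = f444 + f356            # as written in the post: no division by 2
    g = (f277 + f200) / 2
    b = (f150 + f090) / 2
    return np.stack([r, g, b], axis=-1)

# Synthetic stand-in data (assumption): six small frames with values in [0, 1).
rng = np.random.default_rng(0)
frames = [rng.uniform(0.0, 1.0, size=(4, 4)) for _ in range(6)]

rgb = combine_pairs(*frames)

# Report the range of each channel so out-of-range values are easy to spot.
for name, chan in zip("RGB", np.moveaxis(rgb, -1, 0)):
    print(name, float(chan.min()), float(chan.max()))
```

With inputs in [0, 1], G and B stay within [0, 1], while R as written can reach values up to 2. Whether that matters for the result shown in the screenshot is not something this sketch can establish.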

The RGB file prior to the application of BlurExterminator is here: Image15