ALHAMBRA Survey: RGB Image Synthesis

Tutorial by Vicent Peris (PTeam/OAUV/CAHA) and Juan Conejero (PTeam)

Introduction

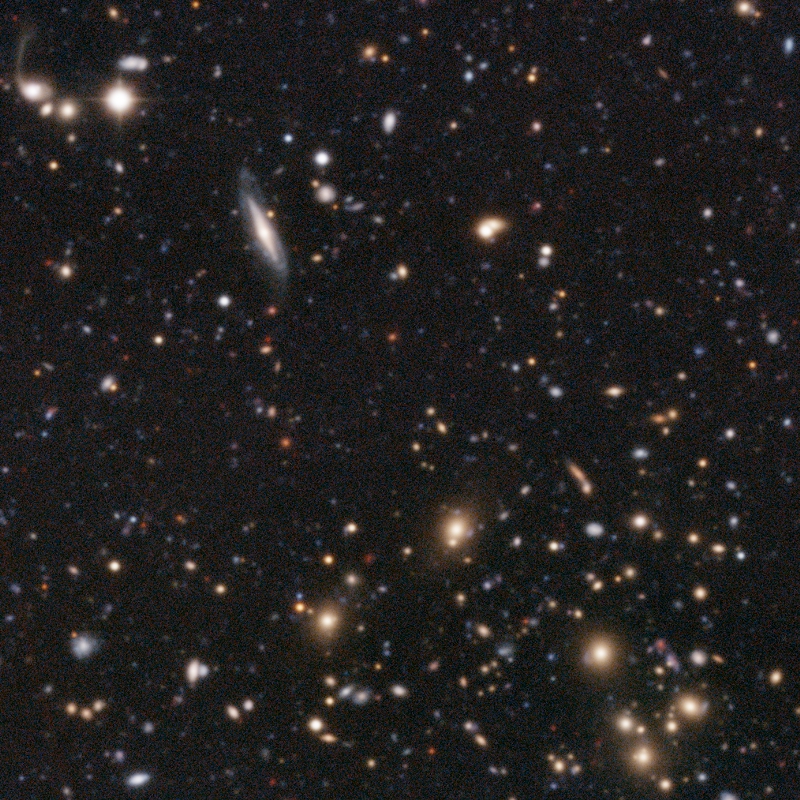

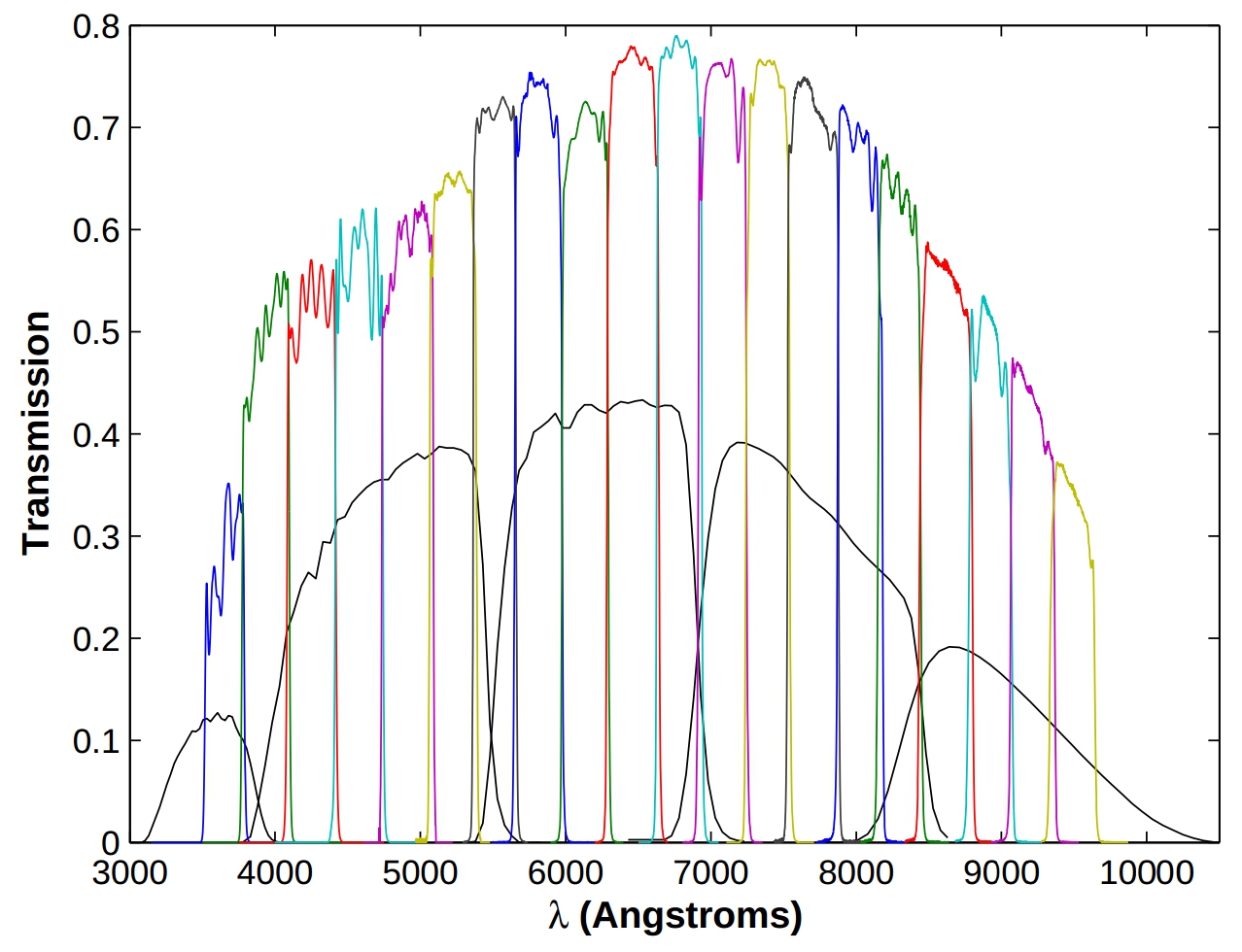

The Advanced Large, Homogeneous Area Medium Band Redshift Astronomical Survey (ALHAMBRA) is a photometric survey primarily intended for studies of cosmic evolution. It covers a total of four square degrees of sky, distributed in eight fields of half a square degree each, observed through 20 contiguous, equal-width, medium-band photometric filters from 3500 Å to 9700 Å, plus the standard J, H and K broad bands in the near infrared. ALHAMBRA contains about 600,000 galaxies down to magnitude 26.5. The data were acquired with the 3.5-meter Zeiss telescope at Calar Alto Observatory in southern Spain.
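As a quick check of the figures above, 20 contiguous filters of equal width spanning 3500 to 9700 Å are each (9700 - 3500) / 20 = 310 Å (31 nm) wide, and adding J, H and K gives the 23 filters used throughout this tutorial. The plain JavaScript sketch below derives idealized central wavelengths from that arithmetic. It assumes perfectly equal spacing, which the real ALHAMBRA filters only approximate, and uses assumed approximate nominal centers for J (1250 nm) and H (1650 nm); the K center (2200 nm) is the value quoted later in this tutorial.

```javascript
// Idealized central wavelengths (nm) of the 23 ALHAMBRA filters.
// ASSUMPTION: the 20 medium bands are treated as exactly contiguous and
// equal-width over 350-970 nm; real filter curves deviate by a few nm.
// ASSUMPTION: J and H centers (1250, 1650 nm) are approximate nominal values.
function alhambraCenters() {
  const lambdaMin = 350; // nm (3500 Å)
  const lambdaMax = 970; // nm (9700 Å)
  const nMedium = 20;
  const width = (lambdaMax - lambdaMin) / nMedium; // 31 nm per band
  const centers = [];
  for (let i = 0; i < nMedium; i++) {
    centers.push(lambdaMin + width * (i + 0.5));
  }
  // Append the near-infrared broad bands J, H and K.
  return { width: width, centers: centers.concat([1250, 1650, 2200]) };
}

const filters = alhambraCenters();
console.log(filters.width);          // 31
console.log(filters.centers.length); // 23
console.log(filters.centers[0], filters.centers[19]); // 365.5 954.5
```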

With 23 filters, ALHAMBRA survey data pose a difficult problem for RGB image synthesis. In the first part of this tutorial we are going to describe the methodology that Vicent Peris, astrophotographer of the ALHAMBRA survey project, has designed for color calibration of RGB images generated from ALHAMBRA data. In the second part of the tutorial we'll see an implementation of this methodology using the PixInsight JavaScript runtime (PJSR). Two PJSR scripts allow us to automate the whole task in PixInsight: find and apply color calibration factors, compute the weights of each ALHAMBRA image for individual RGB filters, and generate the final RGB images.

Figure 1— The ALHAMBRA filter set with superimposed Sloan Digital Sky Survey filter system.

Towards a New Philosophy of Color

The philosophy of color currently adopted by the PixInsight platform was developed by Vicent Peris in 2010, while he was designing a color calibration system for generating RGB images from ALHAMBRA survey data. ALHAMBRA survey images are thus crucial to understanding our color calibration methodology and its implementation in PixInsight.

The art of astrophotography is all about giving an aesthetic meaning to physical phenomena through images. One of the main misunderstandings in astrophotography is to think that the human visual system is the reference for color meaning. This leads to an anthropocentric vision of the images, rooted in the preconceived idea that nature is just what our eyes can perceive. This misunderstanding is even more evident in the case of the ALHAMBRA survey, where a large number of objects are detected only in the infrared, either because of their intrinsic color or because of their high redshifts.

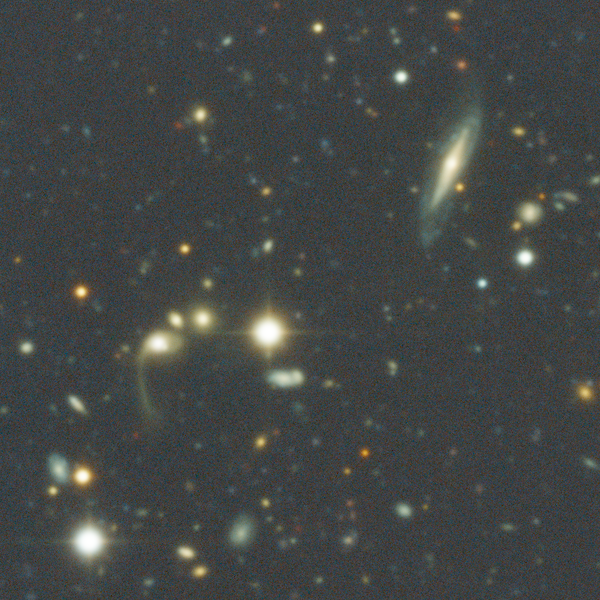

Figure 2 panels: photon-calibrated color image with infrared data; photon-calibrated color image without infrared data.

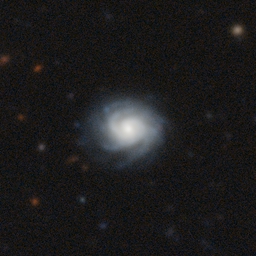

Figure 2— These two images show why it is very important to include the infrared filters in the color composition of ALHAMBRA data. The objects that appear only in the color image extended into the infrared are mainly galaxies that emit little or no light in the visible range, either because of their intrinsic color or because of their high redshifts. Both images are photon-calibrated, which means that the same photon flux produces the same signal level in every filter used to assemble the composition.
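If the input images are flux-calibrated in energy units per unit wavelength (F_λ), one way to reach the photon-calibrated condition described above is to convert each filter to photon flux, N_λ = F_λ λ / (hc), since each photon carries an energy hc/λ. For a color composition only the relative levels between filters matter, so the constant hc can be dropped and each image scaled in proportion to its central wavelength. This is a minimal sketch under those assumptions (F_λ units, one central wavelength per filter, hypothetical function names); it is not necessarily how the ALHAMBRA images shown here were processed.

```javascript
// Relative photon-calibration factors, one per filter.
// ASSUMPTION: images are flux-calibrated in F_lambda units (energy per unit
// time, area and wavelength), and each filter is represented only by its
// central wavelength. Photon flux density is N_lambda = F_lambda * lambda / (h c);
// h c is a common constant, so only the ratio lambda / lambda_ref matters.
function photonFactors(centersNm) {
  const ref = Math.min.apply(null, centersNm); // bluest filter gets factor 1
  return centersNm.map(function (lambda) { return lambda / ref; });
}

// Multiply every pixel of an image (flat array) by a factor.
function scaleImage(pixels, factor) {
  return pixels.map(function (v) { return v * factor; });
}

// Hypothetical example: three filters with equal energy flux density.
const centers = [400, 600, 800];        // nm
const factors = photonFactors(centers); // [1, 1.5, 2]
const images = [[1, 1], [1, 1], [1, 1]];
const photonImages = images.map(function (img, k) {
  return scaleImage(img, factors[k]);
});
console.log(photonImages);
```

Equal energy in every filter thus corresponds to more photons in the redder filters, because each red photon carries less energy than a blue one.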

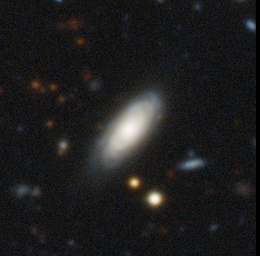

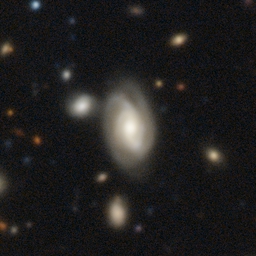

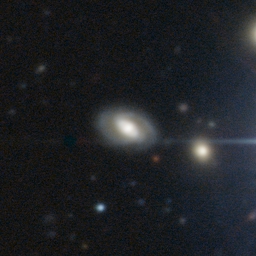

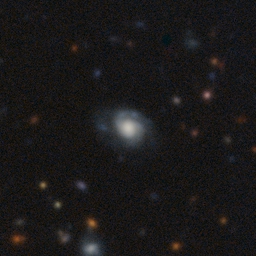

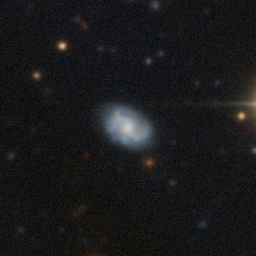

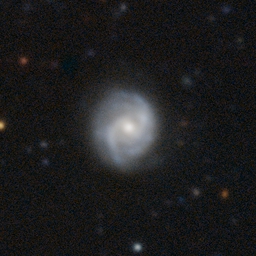

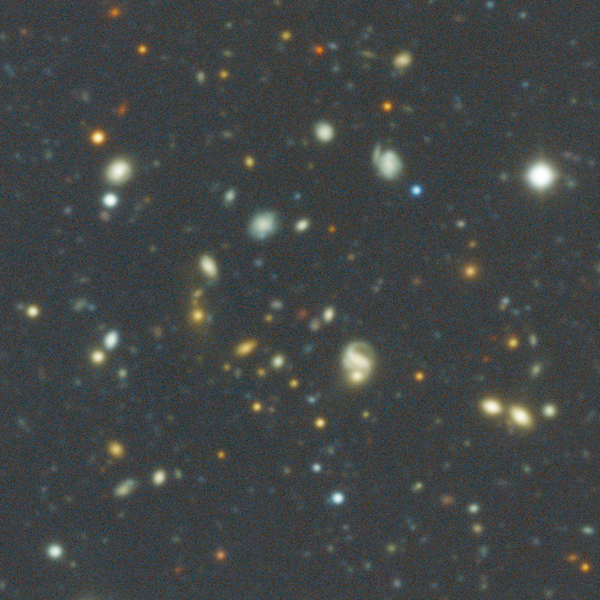

We find a visual meaning in these images when we stop relying on our eyes' sensitivity and start basing the color representation on the physical properties of the photographed objects. In this way, color in the image has a meaning by itself. After extending the color representation into the infrared range, the tonal representation has to be maximized. To achieve this goal, our white reference must also have a documentary value in the images. In this case, we decided to take as white reference the light of a set of face-on spiral galaxies throughout the whole spectral range of the images. This enhances the color differences among stellar populations, because all of them are well represented in these galaxies. For this purpose, Juan Conejero wrote a JavaScript script that performed photometry on seven galaxies in ALHAMBRA Field 4 (Figure 3).

Figure 3— The seven face-on galaxies used to calibrate the white reference of the ALHAMBRA survey.

The image of each of the 23 filters is multiplied by a factor calculated by this script, so that the average intensity of these galaxies is the same in all filters. These factors define the white reference for the whole survey and are applied to all ALHAMBRA fields.
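The factor computation described above can be sketched in a few lines. This is a hypothetical illustration in plain JavaScript, not the original script: it assumes the photometry has already produced one background-subtracted mean intensity per galaxy and filter, averages those over the galaxies, and picks per-filter factors that bring every filter to a common level (here, the mean of the per-filter averages; the choice of target is our assumption).

```javascript
// White-reference factors from the photometry of reference galaxies.
// ASSUMPTION: flux[k][g] is the background-subtracted mean intensity of
// reference galaxy g measured in filter k (all filters in the same units).
function whiteReferenceFactors(flux) {
  // Average intensity of the reference galaxies in each filter.
  const means = flux.map(function (row) {
    let sum = 0;
    for (let g = 0; g < row.length; g++) sum += row[g];
    return sum / row.length;
  });
  // Common target level: the mean of the per-filter averages. Any positive
  // constant would do; this choice preserves the overall galaxy level.
  let target = 0;
  for (let k = 0; k < means.length; k++) target += means[k];
  target /= means.length;
  return means.map(function (m) { return target / m; });
}

// Hypothetical measurements: 3 filters x 2 galaxies (the tutorial uses 23 x 7).
const flux = [[3, 5], [8, 8], [10, 14]];     // per-filter means 4, 8, 12
const factors = whiteReferenceFactors(flux); // approximately [2, 1, 0.667]
console.log(factors);
```

After multiplying each filter image by its factor, the galaxies' average intensity is 8 in all three filters of this example, which is the condition stated above.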

It is at this very specific point that the scientific survey acquires a meaning through our eyes. Our extended-range vision of these images, tuned to the color of the spiral galaxies, results in a photograph where we can see the different types of galaxies and the expansion of the Universe, the latter through the increasingly red colors of distant, highly redshifted galaxies.

Figure 4— Our color calibration methodology yields a result where the main physical processes of the scene have a visual meaning.

The initial versions of the visual ALHAMBRA survey used an elementary RGB blending process implemented as a series of linear mixing functions between the three color channels. Juan Conejero developed a script that implements smooth transitions between color channels and provides the flexibility required to fine-tune the palette. We describe this work and some results of the script in the next section.

Synthetic Filter Sets

For RGB image synthesis with the 23 ALHAMBRA filters, we construct a set of red, green and blue variable-shape filters, which we define by the following equation:

This function returns the filter weight in the [0,1] range for a given wavelength x. The function parameters are as follows:

- c: Central wavelength.
- w1: Filter width, left side, in wavelength units.
- w2: Filter width, right side, in wavelength units.
- s1: Shape parameter, left side, 0 < s1.
- s2: Shape parameter, right side, 0 < s2.

The shape parameters control the geometry of the filter's profile in terms of its kurtosis:

- s = 2: Gaussian function (mesokurtic, normal filter).
- s < 2: Leptokurtic function (peaked filter).
- s > 2: Platykurtic function (flat filter).
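One function with exactly the properties listed above is an asymmetric generalized Gaussian, f(x) = exp(-(|x - c| / w)^s), taking w = w1 and s = s1 for x < c, and w = w2 and s = s2 for x ≥ c. The sketch below evaluates it in plain JavaScript. It is an assumption, not necessarily the equation used by the original script: in particular, we take w as the distance from c at which the weight falls to 1/e, whereas the original could use another width convention, such as a Gaussian sigma or a half width at half maximum.

```javascript
// Variable-shape synthetic filter: an asymmetric generalized Gaussian.
// ASSUMPTION: this specific form, and the convention that w1/w2 are the
// distances from c at which the weight drops to 1/e, are illustrative choices.
//   x      wavelength (nm)
//   c      central wavelength (nm)
//   w1,w2  left/right widths (nm), > 0
//   s1,s2  left/right shape parameters, > 0 (2 = Gaussian)
// Returns a weight in [0, 1], equal to 1 at x = c.
function filterWeight(x, c, w1, w2, s1, s2) {
  const left = x < c;
  const w = left ? w1 : w2;
  const s = left ? s1 : s2;
  return Math.exp(-Math.pow(Math.abs(x - c) / w, s));
}

// Compare three profiles half a width away from the center.
const c = 600, w = 100;
console.log(filterWeight(650, c, w, w, 1, 1).toFixed(3)); // peaked:   0.607
console.log(filterWeight(650, c, w, w, 2, 2).toFixed(3)); // Gaussian: 0.779
console.log(filterWeight(650, c, w, w, 8, 8).toFixed(3)); // flat:     0.996
```

At half a width from the center, the peaked profile has already dropped to about 0.61 while the flat one is still close to 1, which matches the peaked and flat behaviors listed above.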

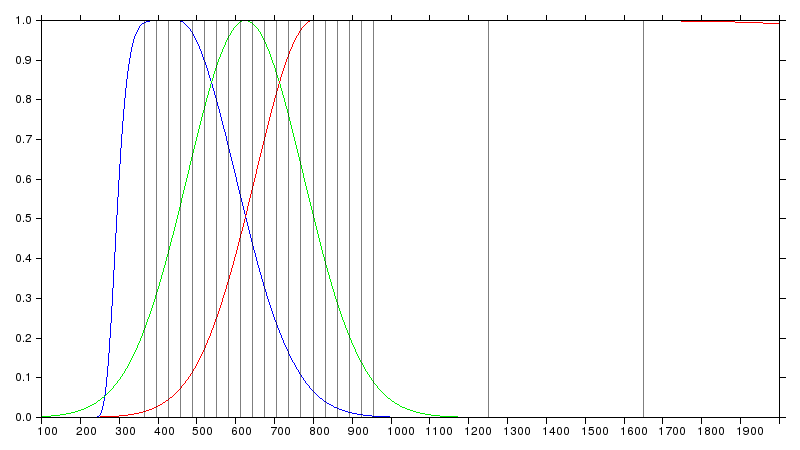

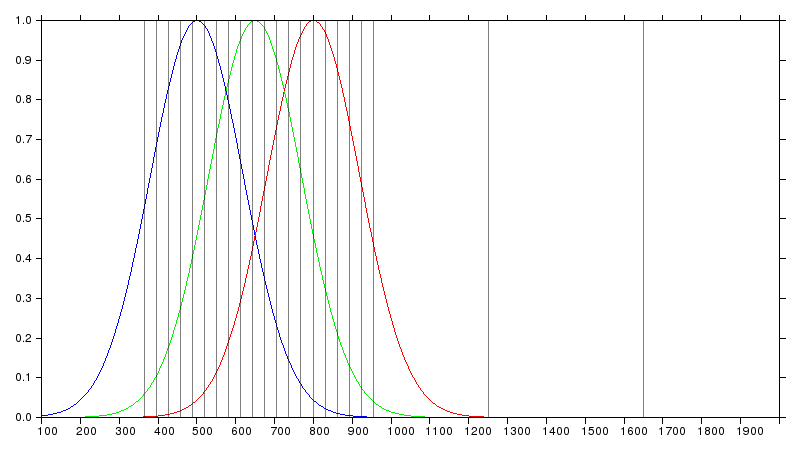

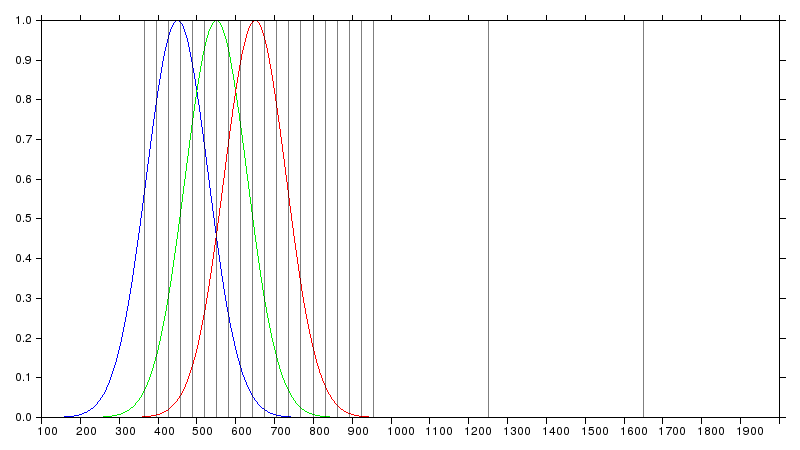

This function allows us to model each filter separately with the required accuracy and flexibility. We have defined four filter sets in this example, which we call extended, uniform, dense and visual for easy identification:

- Extended filter set: aims at representing the whole available data set while providing a plausible visual color representation.
- Uniform filter set: covers only the main data subset (the 20 medium-band filters from 350 to 970 nm) uniformly with Gaussian filter shapes.
- Dense filter set: covers only the main data subset uniformly with flat filters.
- Visual filter set: covers only part of the main data subset, with filters roughly centered at the maxima of visual perception.

The filter functions and their parameters are plotted and tabulated below. In the graphs, the horizontal axis represents wavelength in nm and the vertical axis represents filter transmission. The vertical gray lines indicate the positions of the 23 ALHAMBRA filters, except the K filter at 2200 nm, which is not shown.

Sufficient crossover between filters is crucial to achieve good color rendition. Filter crossover is necessary to achieve rich and deep chroma components, where the red, green and blue components blend together to provide a continuous tonal representation without gaps.
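One simple way to quantify the crossover just described is to scan the spectrum and find where the strongest of the three synthetic filters is weakest: a low value there means a gap between adjacent filters. The metric, the function names and the generalized-Gaussian filter form below are our own assumptions for illustration; the filter parameters are those of the uniform and dense tables in this tutorial.

```javascript
// Hypothetical crossover check for a synthetic RGB filter set.
// ASSUMPTION: filters have the asymmetric generalized-Gaussian form
// exp(-(|x - c| / w)^s), using (w1, s1) left of c and (w2, s2) right of c.
function filterWeight(x, f) {
  const left = x < f.c;
  const w = left ? f.w1 : f.w2;
  const s = left ? f.s1 : f.s2;
  return Math.exp(-Math.pow(Math.abs(x - f.c) / w, s));
}

// Scan [from, to] in 1 nm steps and return the wavelength where the strongest
// filter response is weakest. A low value flags a gap between filters.
function coverageFloor(filters, from, to) {
  let worst = { lambda: from, weight: Infinity };
  for (let x = from; x <= to; x++) {
    let best = 0;
    for (let i = 0; i < filters.length; i++) {
      best = Math.max(best, filterWeight(x, filters[i]));
    }
    if (best < worst.weight) worst = { lambda: x, weight: best };
  }
  return worst;
}

// Uniform and dense sets, from the tables in this tutorial.
const uniform = [
  { c: 500, w1: 120, w2: 120, s1: 2, s2: 2 },
  { c: 650, w1: 120, w2: 120, s1: 2, s2: 2 },
  { c: 800, w1: 120, w2: 120, s1: 2, s2: 2 }
];
const dense = [
  { c: 450, w1: 120, w2: 120, s1: 6, s2: 6 },
  { c: 650, w1: 120, w2: 120, s1: 6, s2: 6 },
  { c: 850, w1: 120, w2: 120, s1: 6, s2: 6 }
];

// Scan between the outermost centers to isolate the crossover regions.
console.log(coverageFloor(uniform, 500, 800)); // minimum at 575 nm
console.log(coverageFloor(dense, 450, 850));   // minimum at 550 nm
```

Under these assumptions the flat-topped dense filters keep a higher floor (about 0.72) than the Gaussian uniform ones (about 0.68), despite their wider spacing. Scanning the full 350 to 970 nm range instead would also show how well the ends of the spectrum are covered.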

The whole work, including image synthesis and graph generation, has been carried out in PixInsight with a JavaScript script that you can download at the end of this tutorial.
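Once each ALHAMBRA image has its white-reference factor and its weight in each synthetic filter, the RGB channels can be assembled. The following plain JavaScript sketch shows one way to do it; it does not use the PJSR API, and the normalization of each channel by the sum of its weights is our assumption, not necessarily what the downloadable script does.

```javascript
// Hypothetical RGB synthesis from N filter images.
// ASSUMPTIONS: images[k] are flat pixel arrays of equal length; factors[k] is
// the white-reference factor of filter k; weights[ch][k] is the weight of
// filter k in channel ch (R, G, B). Each channel is the weighted average of
// the calibrated images, normalized by the sum of the channel's weights.
function synthesizeRGB(images, factors, weights) {
  const nPix = images[0].length;
  return weights.map(function (w) {
    const out = new Float64Array(nPix);
    let wsum = 0;
    for (let k = 0; k < images.length; k++) wsum += w[k];
    if (wsum === 0) return out; // no contributing filters: black channel
    for (let k = 0; k < images.length; k++) {
      const a = (w[k] * factors[k]) / wsum;
      if (a === 0) continue;
      for (let p = 0; p < nPix; p++) out[p] += a * images[k][p];
    }
    return out;
  });
}

// Three two-pixel images, ordered from blue to red.
const images = [[1, 2], [3, 4], [5, 6]];
const factors = [1, 1, 1];
const weights = [
  [0, 0.5, 1], // R: red image plus half of the middle one
  [0, 1, 0],   // G
  [1, 0, 0]    // B
];
const rgb = synthesizeRGB(images, factors, weights);
console.log(Array.from(rgb[0]), Array.from(rgb[1]), Array.from(rgb[2]));
```

Normalizing by the weight sum keeps the three channels on a comparable scale even when one synthetic filter spans many more ALHAMBRA images than the others, as the red filter of the extended set does.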

Extended filter set

| Filter | Central wavelength (nm) | w1 (nm) | w2 (nm) | s1 | s2 |
|---|---|---|---|---|---|
| Blue | 450 | 150 | 150 | 8 | 2 |
| Green | 625 | 150 | 150 | 2 | 2 |
| Red | 800 | 150 | 2000 | 2 | 8 |

Uniform filter set

| Filter | Central wavelength (nm) | w1 (nm) | w2 (nm) | s1 | s2 |
|---|---|---|---|---|---|
| Blue | 500 | 120 | 120 | 2 | 2 |
| Green | 650 | 120 | 120 | 2 | 2 |
| Red | 800 | 120 | 120 | 2 | 2 |

Dense filter set

| Filter | Central wavelength (nm) | w1 (nm) | w2 (nm) | s1 | s2 |
|---|---|---|---|---|---|
| Blue | 450 | 120 | 120 | 6 | 6 |
| Green | 650 | 120 | 120 | 6 | 6 |
| Red | 850 | 120 | 120 | 6 | 6 |

Visual filter set

| Filter | Central wavelength (nm) | w1 (nm) | w2 (nm) | s1 | s2 |
|---|---|---|---|---|---|
| Blue | 450 | 80 | 80 | 2 | 2 |
| Green | 550 | 80 | 80 | 2 | 2 |
| Red | 650 | 80 | 80 | 2 | 2 |
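The four tables can be turned directly into the per-image channel weights that the synthesis step needs. The sketch below encodes them as data and evaluates each synthetic filter at the central wavelength of every ALHAMBRA image. It rests on two assumptions stated in the code: the asymmetric generalized-Gaussian filter form (weight 1/e at a distance w from c), and idealized ALHAMBRA centers with approximate J and H values. Sampling each image at a single wavelength, rather than integrating over its passband, is also a simplification.

```javascript
// Hypothetical per-image channel weights for the four filter sets above.
// ASSUMPTIONS: filter form exp(-(|x - c| / w)^s) with (w1, s1) left of c and
// (w2, s2) right of c; idealized ALHAMBRA centers (20 contiguous 31 nm bands
// over 350-970 nm, plus approximate J, H, K centers at 1250, 1650, 2200 nm).
const FILTER_SETS = { // [c, w1, w2, s1, s2], as in the tables
  extended: { B: [450, 150, 150, 8, 2], G: [625, 150, 150, 2, 2], R: [800, 150, 2000, 2, 8] },
  uniform:  { B: [500, 120, 120, 2, 2], G: [650, 120, 120, 2, 2], R: [800, 120, 120, 2, 2] },
  dense:    { B: [450, 120, 120, 6, 6], G: [650, 120, 120, 6, 6], R: [850, 120, 120, 6, 6] },
  visual:   { B: [450, 80, 80, 2, 2],   G: [550, 80, 80, 2, 2],   R: [650, 80, 80, 2, 2] }
};

function filterWeight(x, p) {
  const left = x < p[0];
  const w = left ? p[1] : p[2];
  const s = left ? p[3] : p[4];
  return Math.exp(-Math.pow(Math.abs(x - p[0]) / w, s));
}

function alhambraCenters() {
  const centers = [];
  for (let i = 0; i < 20; i++) centers.push(350 + 31 * (i + 0.5));
  return centers.concat([1250, 1650, 2200]);
}

// Returns { R, G, B }, each an array of 23 weights (one per ALHAMBRA image).
function channelWeights(setName) {
  const set = FILTER_SETS[setName];
  const centers = alhambraCenters();
  const out = {};
  ["R", "G", "B"].forEach(function (ch) {
    out[ch] = centers.map(function (x) { return filterWeight(x, set[ch]); });
  });
  return out;
}

const ext = channelWeights("extended");
const vis = channelWeights("visual");
// Weight of the K-band image (last entry) in the red channel:
console.log(ext.R[22].toFixed(2)); // 0.94
console.log(vis.R[22] < 1e-6);     // true
```

In this sketch the K-band image contributes almost fully to red with the extended set and not at all with the visual set, which illustrates in numbers why the two sets represent such different amounts of information.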

Image Comparisons

The following figures compare selected crops of the full-size synthesized RGB images, rendered with each of the four filter sets. The complete synthetic RGB images are also available for download at the end of this tutorial.

Three comparison crops, each rendered with the extended, uniform, dense and visual RGB filter sets.

Conclusion

The characteristics and performance of the human visual system have long defined the concepts of color fidelity in nature photography. However, these concepts do not apply to deep-sky astrophotography. Fortunately, astrophotographers are blind photographers, and our freedom lies precisely in our inability to see what our cameras capture. Because it is a visual art neither guided nor limited by the eye, our photographic discipline is a singular space where concept and beauty meet and acquire meaning in their purest state.

This is particularly evident in the ALHAMBRA survey project. A visual filter set, what is often called 'natural colors' (whatever that means), is not appropriate to represent ALHAMBRA data. In fact, the RGB filter set that we have called 'visual set' is already much better than actual visual perception, and even with this concession the result is very poor. In our opinion, the best filter configuration is the extended filter set, because it is the one that represents the most information. This is coherent with the documentary criterion that defines our deep-sky color calibration methodology.

Downloadable Files

Use the following links to download the synthetic RGB images and the JavaScript script used to generate them in PixInsight.

Synthetic RGB Images

Flux-calibrated data

These files have been kindly provided by the ALHAMBRA team exclusively for this tutorial.

Legal note — The ALHAMBRA data here presented are to be used only as input for the exercises in this tutorial. For any other use, be it scientific, educational or commercial, you must contact the ALHAMBRA team at www.alhambrasurvey.com.

Script